High-performance machine learning

Project reference: 2001

The goal of the project is to demonstrate that HPC tools are (at least) as good, or better, for big data processing as the popular JVM-based technologies, such as the Hadoop MapReduce or Apache Spark. The performance (in terms of floating-point operations per second) itself is clearly not the only metrics to judge. We must also address other aspects, for which the traditional big data frameworks shine, i.e. the runtime resilience and parallel processing of distributed data.

The runtime resilience of parallel HPC applications is a vivid research field for quite a few years and several approaches are now application ready. We plan to use GPI-2 API (http://www.gpi-site.com/gpi2) implementing GASPI (Global Address Space Programming Interface) specification, which offers, among other appealing features (asynchronous data flow, etc.), mechanisms to react to failures.

The parallel, distributed data processing in the traditional big data world is made possible using special filesystems, such as the Hadoop file system (HDFS) or analogues. HDFS enables data processing using the information of data locality, i.e. to process data that is “physically” located on the compute node, without the need for data transfer over the network. Despite it’s the many advantages, HDFS is not particularly suitable for deployment on HPC facilities / supercomputers and use with C/C++ or Fortran MPI codes, for several reasons. Within the project we plan to explore other possibilities (in-memory storage / NVRAM, and/or multi-level architectures, etc.) and search for the most suitable alternative to HDFS.

Having a powerful set of tools for big data processing and high-performance data analytics (HDPA) built using HPC tools and compatible with HPC environments, is highly desirable, because of growing demand for such tasks on supercomputer facilities.

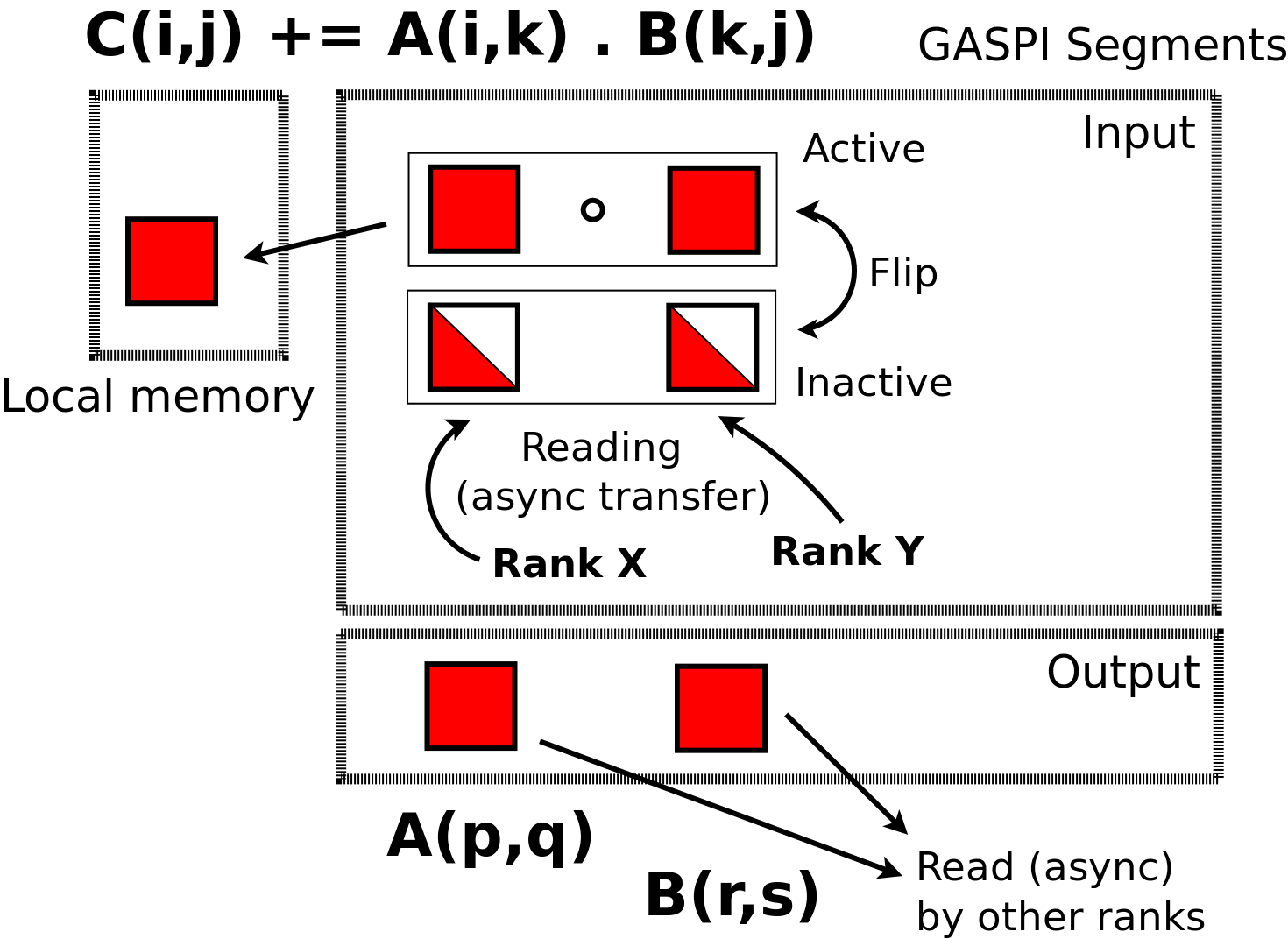

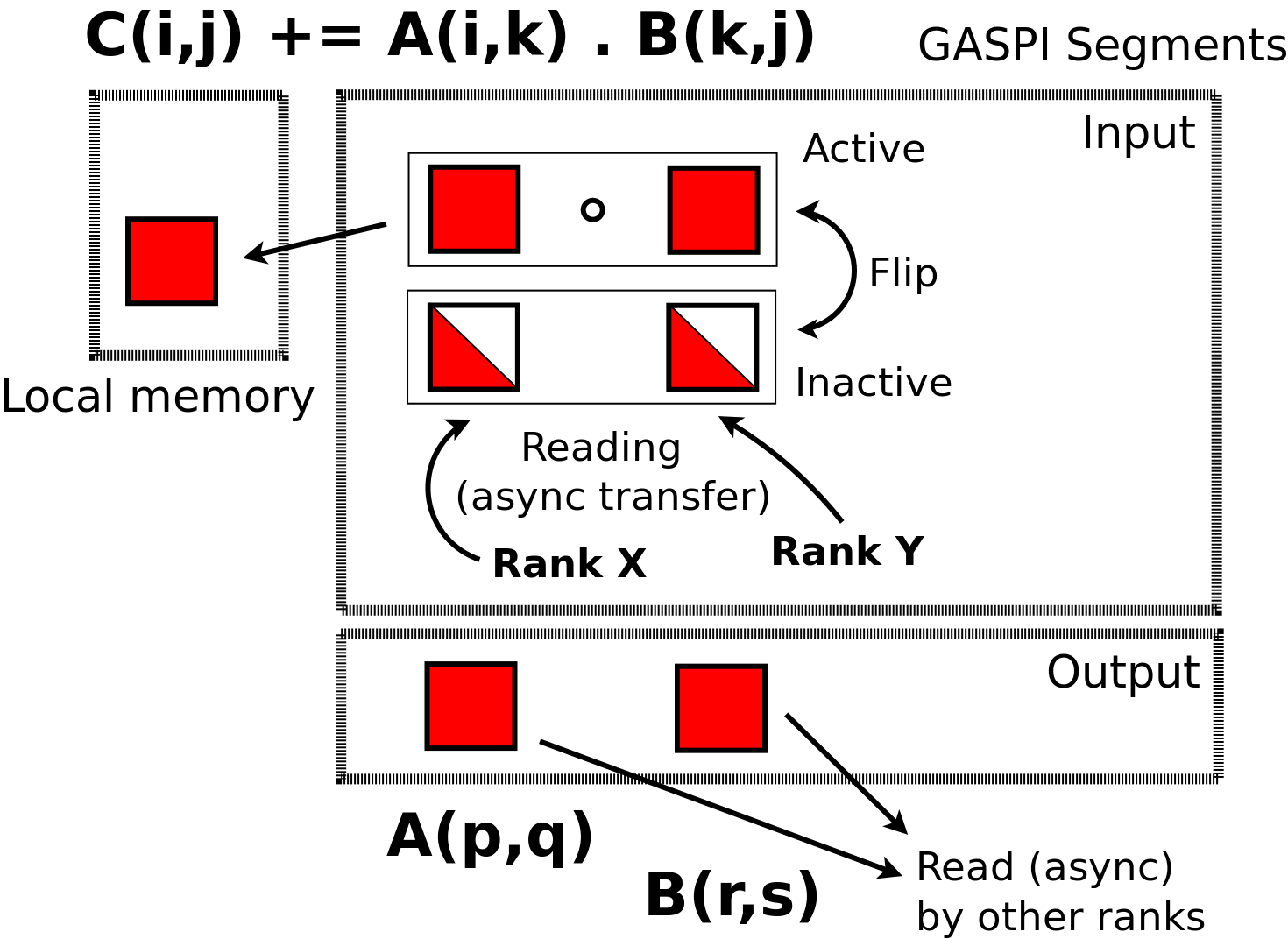

Schema of GASPI matrix-matrix multiplication and memory layout on a rank (parallel process).

Project Mentor: Doc. Mgr. Michal Pitoňák, PhD.

Project Co-mentor: Ing. Marian Gall, PhD.

Site Co-ordinator: Mgr. Lukáš Demovič, PhD.

Participants: Muhammad Omer, Cem Oran

Learning Outcomes:

Student will learn about MPI and GASPI (C/C++ or Fortran), parallel filesystems (Lustre, BeeGFS/BeeOND) and basics of Apache Spark. He/she will also get familiar with ideas of efficient use of tensor-contractions and parallel I/O in machine learning algorithms.

Student Prerequisites (compulsory)

Basic knowledge of C/C++ or Fortran and MPI.

Student Prerequisites (desirable):

Advanced knowledge of C/C++ or Fortran and MPI. Basic knowledge of GASPI, Scala, Apache Spark, big data concepts, machine learning algorithms, BLAS libraries and other HPC tools.

Training Materials:

Workplan:

- Week 1: training;

- weeks 2-3: introduction to GASPI, Scala, Apache Spark (and MLlib) and efficient implementation of algorithms,

- weeks 4-7: implementation, optimization and extensive testing/benchmarking of the codes,

- week 8: report completion and presentation preparation

Final Product Description:

Expected project result is (C/C++ or Fortran) MPI and (runtime resilient) GASPI implementation of a selected, popular machine learning algorithm. Codes will be benchmarked and compared with the state-of-the-art implementations of the same algorithm in Apache Spark MLlib or other “traditional” big data / HDPA technology.

Adapting the Project: Increasing the Difficulty:

The choice of machine learning algorithm(s) to implement depends on the student’s skills and preferences. An ML algorithm implementation, to be efficient and run-time resilience, is challenging enough.

Adapting the Project: Decreasing the Difficulty:

Similar to “increasing difficulty”: we can choose one of simpler machine learning algorithms and /or sacrifice the requirement of runtime resilience.

Resources:

Student will have access to the necessary learning material, as well as to our local IBM P775 supercomputer and x86 infiniband clusters. The software stack we plan to use is open source.

Organisation:

Computing Center, Centre of Operations of the Slovak Academy of Sciences

Leave a Reply