Analysis of data management policies in HPC architectures

Project reference: 2101

Outstanding computational capabilities are useless if data is not where it is needed, when it is needed. This is common knowledge in computer architecture, and it matters even more now that systems are extremely parallel and memory bandwidth is becoming scarcer by the day. For this reason, correctly moving data through the memory hierarchy of the system is essential to achieving the performance and, more importantly, the efficiency required to reach Exascale.

To address this, the MEEP Project, as part of the ACME HPC accelerator architecture, proposes smart data movement and placement policies that autonomously transfer data among the different levels of the memory hierarchy. These policies will enable seamless access to data and avoid unnecessary stalls in the processor pipeline. The result will be faster and more efficient computing, in which neither time nor energy is wasted waiting for the data that is needed, the access patterns of the applications are better leveraged, and the available bandwidth is used more efficiently overall.
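To make the idea of an autonomous data movement policy more concrete, the toy model below counts demand misses in a small fully associative LRU cache, with and without a simple next-line prefetcher. It is an illustrative sketch only, not the MEEP policies or the Coyote implementation; the 64-byte line size, the cache size and the names `LRUCache` and `simulate` are assumptions chosen for illustration.

```python
from collections import OrderedDict

LINE_SIZE = 64  # assumed cache-line size in bytes


class LRUCache:
    """Toy fully associative cache with least-recently-used replacement.

    It only tracks which line addresses are resident; no data is stored.
    """

    def __init__(self, num_lines):
        self.num_lines = num_lines
        self.lines = OrderedDict()  # key order doubles as recency order

    def contains(self, line):
        return line in self.lines

    def touch(self, line):
        """Bring a line in (or refresh it), evicting the LRU line if full."""
        if line in self.lines:
            self.lines.move_to_end(line)
            return
        if len(self.lines) >= self.num_lines:
            self.lines.popitem(last=False)  # evict the least recently used line
        self.lines[line] = True


def simulate(addresses, num_lines, prefetch_next_line):
    """Return the number of demand misses for a sequence of byte addresses."""
    cache = LRUCache(num_lines)
    misses = 0
    for addr in addresses:
        line = addr // LINE_SIZE
        if not cache.contains(line):
            misses += 1  # in a real core, the pipeline would stall here
        cache.touch(line)
        if prefetch_next_line:
            # Hypothetical policy: always fetch the next sequential line ahead of use.
            cache.touch(line + 1)
    return misses


stream = range(0, 64 * 16, 8)  # unit-stride walk over 16 lines of 8-byte elements
print(simulate(stream, num_lines=4, prefetch_next_line=False))  # 16
print(simulate(stream, num_lines=4, prefetch_next_line=True))   # 1
```

For this unit-stride stream, the prefetcher turns 16 demand misses into 1. An irregular access pattern would typically benefit far less from such a naive policy, which is why data management policies need to be matched to the behaviour of each workload.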

The selected candidate(s) will work with Coyote, a new in-house RISC-V architecture simulator, on the research, analysis, implementation and experimentation of the new data management policies proposed in MEEP. These policies will be shaped by the behaviour of selected workloads widely used in traditional HPC, such as DGEMM, SpMV and FFT, as well as future HPC applications.
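As an example of how a workload's behaviour can shape these policies, the sketch below is a textbook sparse matrix-vector product (SpMV) in the Compressed Sparse Row (CSR) format, written in plain Python for readability. It is not taken from the MEEP workload set, and the function name `spmv_csr` is hypothetical. The `values` and `col_idx` arrays are read sequentially, whereas `x` is accessed indirectly through `col_idx`, so its access pattern depends on the sparsity structure of the matrix rather than on a fixed stride as in a dense kernel such as DGEMM.

```python
def spmv_csr(row_ptr, col_idx, values, x):
    """Compute y = A @ x for a matrix A stored in CSR format.

    row_ptr[i]..row_ptr[i + 1] delimits the nonzeros of row i;
    col_idx[k] and values[k] give the column and value of nonzero k.
    """
    n_rows = len(row_ptr) - 1
    y = [0.0] * n_rows
    for i in range(n_rows):
        acc = 0.0
        for k in range(row_ptr[i], row_ptr[i + 1]):
            # values[k] and col_idx[k] are streamed in order (stride-friendly),
            # but x[col_idx[k]] is an indirect, data-dependent access.
            acc += values[k] * x[col_idx[k]]
        y[i] = acc
    return y


# A = [[1, 0, 2],
#      [0, 3, 0],
#      [4, 0, 5]]
print(spmv_csr([0, 2, 3, 5], [0, 2, 1, 0, 2], [1.0, 2.0, 3.0, 4.0, 5.0], [1.0, 1.0, 1.0]))
# [3.0, 3.0, 9.0]
```

Because the addresses touched in `x` are only known once `col_idx` has been read, a simple stride-based policy cannot anticipate them, in contrast to the regular, highly reusable access patterns of dense kernels.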

MEEP project logo, in which Coyote and this Summer of HPC project are contextualized:

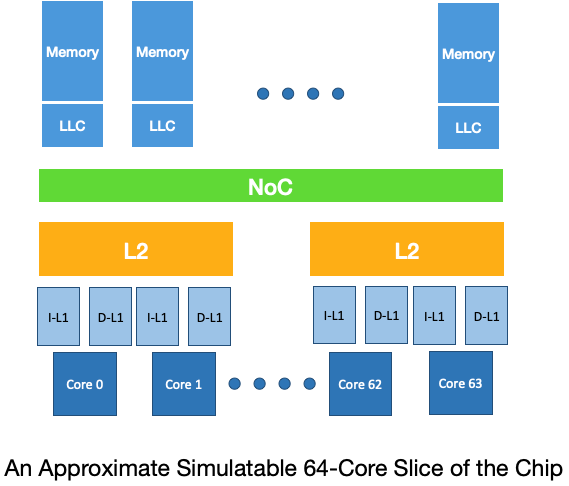

The ACME architecture to accelerate HPC applications:

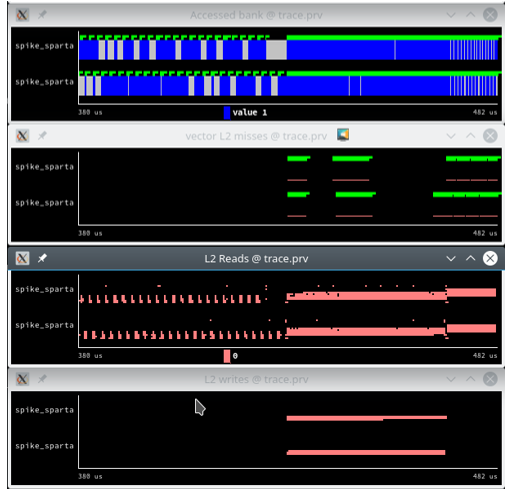

Coyote Simulator environment:

a) ACME model to be simulated

b) Behavioural visualizations of the L2 cache

Project Mentor: Borja Pérez

Project Co-mentor: Teresa Cervero

Site Co-ordinators: Maria-Ribera Sancho and Carolina Olmopenate

Participants: Regina Mumbi Gachomba, Aneta Ivaničová

Learning Outcomes:

– A deep understanding of how different HPC workloads behave and how they stress the resources of the system.

– Expertise with simulators and their visualization tools, which are the first step when proposing new designs for HPC architectures.

Student Prerequisites (compulsory):

– Object oriented programming

– Moderate understanding of computer architecture and of how to program it efficiently.

Student Prerequisites (desirable):

– C++

Training Materials:

– Paraver trace visualization tool: https://tools.bsc.es/paraver

– MEEP web Page: https://meep-project.eu/

– Coyote: https://github.com/borja-perez/Coyote

Workplan:

- Week 1: Training.

- Week 2: Familiarization with the proposed architecture and target workloads.

- Week 3: Familiarization with the simulation tools.

- Weeks 4-7: Analysis of data management policies and potential proposal of new ones.

- Week 8: Conclusions.

Final Product Description:

A comparative analysis of different data management policies for the evaluated HPC workloads, together with relevant conclusions on their suitability for the HPC context.

Adapting the Project: Increasing the Difficulty:

It is possible to increase the complexity of the project by considering more features and low-level details when defining the architectural blocks that must implement the data management policies. The results of the analysis and the candidates' input will be used to develop and implement new policies.

Adapting the Project: Decreasing the Difficulty:

Focus may be shifted to the visualization side of the analysis. This would still provide valuable learning for the candidate, such as defining metrics to evaluate HPC architectures, using visualization correctly to gain insight, and becoming familiar with the associated tools.

Resources:

The Coyote simulator is developed at BSC and is open source. The required computing equipment and facilities will be provided by BSC.

*Online only in any case

Organisation:

BSC – Computer Science – European Exascale Accelerator
