A brief overview of HiPerBorea and permaFoam

It has been six weeks since Summer of HPC began, and I realized I had not yet written a blog post giving an insight into what we are doing. In this post, I would like to talk about the project’s purpose.

What is HiPerBorea?

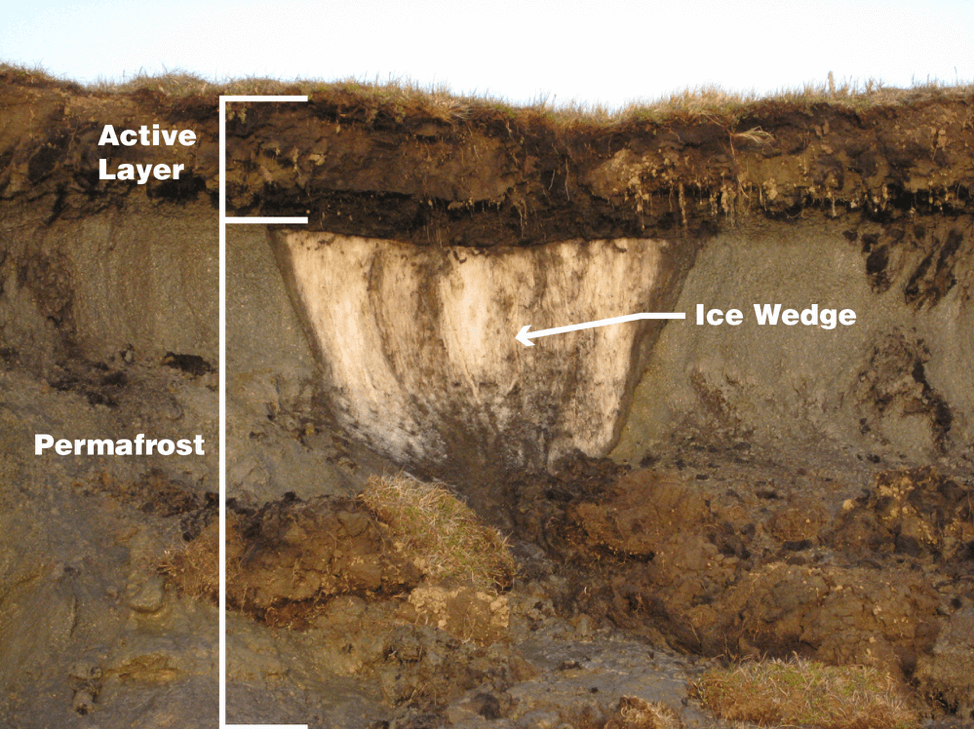

The project I am working on is titled HiPerBorea. Its purpose is to quantify the effect of global warming on permafrost areas through numerical studies at the watershed scale. You may ask, “What is permafrost?” Permafrost is a thick subsurface layer of soil that remains below freezing point throughout the year, occurring chiefly in polar regions.

Unfortunately, due to climate change, permafrost is thawing, and this has severe consequences for our lives. For instance:

- Many northern villages are built on permafrost. When permafrost is frozen, it’s harder than concrete. However, thawing permafrost can destroy houses, roads, and other infrastructure.

- When permafrost is frozen, the plant material in the soil (called organic carbon) cannot decompose, or rot away. As permafrost thaws, microbes begin decomposing this material, a process that releases greenhouse gases such as carbon dioxide and methane into the atmosphere.

- When permafrost thaws, so do ancient bacteria and viruses in the ice and soil. These newly unfrozen microbes could make humans and animals very sick.

(Credit: kml.gina.alaska.edu)

What is permaFoam?

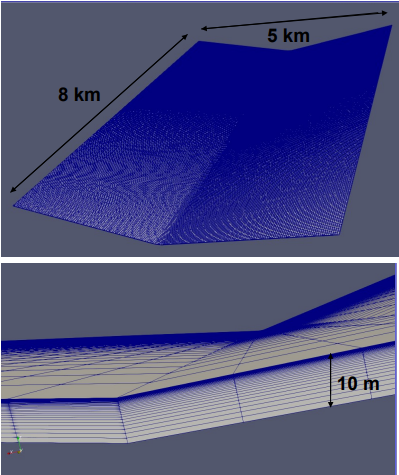

Quantifying the effects of global warming requires HPC tools and resources. To meet this need, permaFoam, an open-source OpenFOAM solver for permafrost modeling, has been built. It has been used extensively on 500 cores in parallel to study a permafrost-dominated watershed in Central Siberia, and its computing capabilities have been tested on up to 4,000 cores. Because of the large scales involved in the HiPerBorea project, permaFoam needs to run on at least tens of thousands of cores simultaneously, on a mesh of one billion cells.

We want to demonstrate that permaFoam has good parallel performance on the big meshes that will be required to simulate actual geometries. To perform the scalability tests, we first need to decompose the mesh into subdomains, one per processor. In OpenFOAM, this is done by a utility called decomposePar. However, decomposePar runs in serial, so it takes too much time and memory: for a mesh of more than one billion cells, decomposition takes more than a week and over 1 TB of memory.
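To put these figures in perspective, here is a back-of-envelope estimate in Python. It rests on our own simplifying assumptions, not on measurements: memory scales linearly with cell count, 1 TB means 10^12 bytes, and a parallel tool would split the mesh evenly across ranks. The value of 10,000 ranks stands in for the lower end of "tens of thousands of cores".

```python
# Back-of-envelope estimate using the figures quoted in this post.
# Assumptions (ours, not measured): memory grows linearly with cell count,
# 1 TB = 10**12 bytes, and a parallel tool splits the mesh evenly across ranks.

MESH_CELLS = 1_000_000_000    # "more than 1 billion cells"
SERIAL_MEMORY_BYTES = 10**12  # "more than 1 TB" needed by serial decomposePar


def bytes_per_cell(total_bytes: int, cells: int) -> float:
    """Average memory footprint per cell implied by the serial run."""
    return total_bytes / cells


def share_per_rank(cells: int, ranks: int) -> tuple[int, float]:
    """Cells and estimated bytes held by each rank under an even split."""
    cells_each = cells // ranks
    return cells_each, cells_each * bytes_per_cell(SERIAL_MEMORY_BYTES, cells)


if __name__ == "__main__":
    print(f"~{bytes_per_cell(SERIAL_MEMORY_BYTES, MESH_CELLS):.0f} bytes per cell")
    for ranks in (500, 4_000, 10_000):  # core counts mentioned in the post
        cells_each, mem_each = share_per_rank(MESH_CELLS, ranks)
        print(f"{ranks:>6} ranks: {cells_each:,} cells, ~{mem_each / 1e6:,.0f} MB each")
```

Under these assumptions, 1 TB for a billion cells works out to roughly 1 kB per cell, and each of 10,000 ranks would hold about 100,000 cells (around 100 MB). This is why spreading the decomposition itself across many processes is attractive.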

We are developing a tool that does exactly what decomposePar does, but in parallel, using the domain decomposition method. Once the tool is built, we will run scalability tests, and my partner Stavros will share the results with you.
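To illustrate why decomposition lends itself to parallelism, here is a hypothetical Python sketch of a simple geometric decomposition. It is neither decomposePar's implementation nor our tool's, and the functions `local_bounds`, `reduce_bounds`, `assign` and `decompose` are illustrative names. Each "rank" holds a chunk of cell centres. The ranks combine their bounding boxes into a global one (in an MPI code this would be an allreduce), and then each rank assigns its own cells to subdomains without talking to the others. Threads stand in for MPI ranks here, so the sketch shows the independence of the work, not a real speedup.

```python
"""Hypothetical sketch: geometric domain decomposition computed chunk by chunk."""
from concurrent.futures import ThreadPoolExecutor

Point = tuple[float, float, float]
Box = tuple[Point, Point]  # (min corner, max corner)


def local_bounds(centres: list[Point]) -> Box:
    """Bounding box of one rank's cell centres."""
    lo = tuple(min(c[d] for c in centres) for d in range(3))
    hi = tuple(max(c[d] for c in centres) for d in range(3))
    return lo, hi


def reduce_bounds(boxes: list[Box]) -> Box:
    """Combine per-rank boxes into the global box (the role of an allreduce)."""
    lo = tuple(min(b[0][d] for b in boxes) for d in range(3))
    hi = tuple(max(b[1][d] for b in boxes) for d in range(3))
    return lo, hi


def assign(centres: list[Point], box: Box, n: tuple[int, int, int]) -> list[int]:
    """Map each cell centre to a subdomain id on an nx*ny*nz grid over the box."""
    lo, hi = box
    ids = []
    for c in centres:
        idx = []
        for d in range(3):
            span = (hi[d] - lo[d]) or 1.0  # avoid division by zero for flat meshes
            i = int((c[d] - lo[d]) / span * n[d])
            idx.append(min(i, n[d] - 1))  # cells on the max face go to the last slab
        ids.append(idx[0] + n[0] * (idx[1] + n[1] * idx[2]))
    return ids


def decompose(chunks: list[list[Point]], n: tuple[int, int, int]) -> list[list[int]]:
    """Decompose all chunks; each chunk is processed independently after the reduction."""
    with ThreadPoolExecutor() as pool:
        box = reduce_bounds(list(pool.map(local_bounds, chunks)))
        return list(pool.map(lambda ch: assign(ch, box, n), chunks))


if __name__ == "__main__":
    # A 4 x 4 x 1 block of unit cells, split between two "ranks", cut into 2 x 2 x 1 subdomains
    cells = [(x + 0.5, y + 0.5, 0.5) for x in range(4) for y in range(4)]
    print(decompose([cells[:8], cells[8:]], (2, 2, 1)))
    # prints [[0, 0, 2, 2, 0, 0, 2, 2], [1, 1, 3, 3, 1, 1, 3, 3]]
```

A uniform split of the bounding box does not balance cell counts on irregular meshes. decomposePar also offers methods such as `scotch` and `metis`, which treat the mesh as a graph so that subdomains get similar numbers of cells and share fewer faces.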

I hope I made our aim clear and that you learned something from this blog post.

Thank you for reading.
