Assessment of the parallel performance of permaFoam up to tens of thousands of cores and on new architectures

Project reference: 2207

Permafrost modeling is important for assessing climate change impacts, both in cold regions (where warming is especially intense) and globally (massive quantities of frozen organic carbon are stored in near-surface permafrost). It also has numerous applications in civil and environmental engineering (artificial ground freezing, infrastructure stability and water supply in cold regions, …). It requires High Performance Computing techniques because of the numerous non-linearities and couplings encountered in the underlying physics (thermo-hydrological transfers in soil with freeze/thaw). For instance, permaFoam, the OpenFOAM® (openfoam.com, openfoam.org) solver for permafrost modeling, has been used intensively with 500 cores working in parallel to study a permafrost-dominated watershed in Central Siberia, the Kulingdakan watershed (Orgogozo et al., Permafrost and Periglacial Processes 2019), and its parallel computing capabilities have been tested up to 4000 cores (e.g. Orgogozo et al., InterPore 2020). Because of the large scales to be dealt with in the HiPerBorea project (3D modelling over tens of square kilometers and century time scales; see hiperborea.omp.eu), it is anticipated that permaFoam will need to run on at least tens of thousands of cores simultaneously, on a one-billion-cell mesh. This internship aims at characterizing the scalability behaviour of permaFoam in this range of parallel computing tasks, and at giving a first insight into the code profile using an appropriate profiler. A trial on new architectures (Fujitsu-like processors) will also be performed. A 3D OpenFOAM® case that simulates the permafrost dynamics in the Kulingdakan watershed under current climatic conditions (Xavier et al., in prep) will be used as a test case for this study. The supercomputing infrastructures used will be Occigen (CINES) and Irene-ROME (TGCC).
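To give a feel for the target regime, the short Python sketch below computes the average number of mesh cells each core would handle if the one-billion-cell mesh were split evenly. The function name `cells_per_core` and the core counts are illustrative assumptions, not part of permaFoam or OpenFOAM; the 500 and 4000 core counts quoted above were used on other meshes and appear here only for comparison.

```python
def cells_per_core(total_cells: int, cores: int) -> float:
    """Average number of mesh cells per core for an even split (hypothetical helper)."""
    if cores <= 0:
        raise ValueError("cores must be positive")
    return total_cells / cores


# One-billion-cell mesh, as anticipated for the HiPerBorea project.
TOTAL_CELLS = 1_000_000_000

# Illustrative core counts (assumed values, chosen only to span the range of interest).
for cores in (500, 4_000, 10_000, 50_000):
    load = cells_per_core(TOTAL_CELLS, cores)
    print(f"{cores:>6} cores -> {load:>12,.0f} cells per core")
```

As the per-core load shrinks, communication between subdomains usually takes a larger share of the run time, which is one reason scalability has to be measured rather than assumed.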

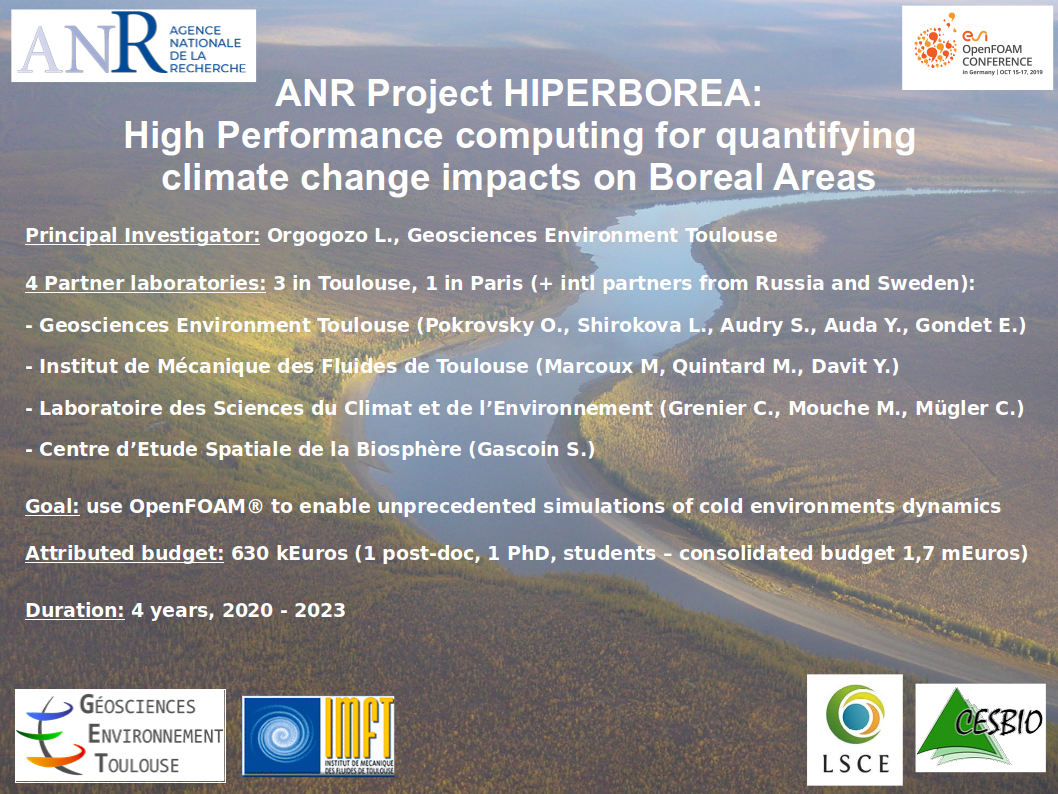

Overview of the HiPerBorea project as presented at the OpenFOAM® Conference held in Berlin in October 2019.

Pre-existing characterization of the scalability of permaFoam on different supercomputers, with different mesh sizes.

Project Mentor: Orgogozo Laurent

Project Co-mentor: Xavier Thibault

Site Co-ordinator: Karim Hasnaoui

Learning Outcomes:

The first outcome for the student will be to become familiar with the well-known, open-source, HPC-friendly CFD toolbox OpenFOAM. The student will also learn the basics of massively parallel computing on today's supercomputers.

Student Prerequisites (compulsory):

Programming skills, basic knowledge of the Linux environment, dynamism, and a sense of initiative.

Student Prerequisites (desirable):

C/C++/Bash/Python programming skills.

First experience with OpenFOAM.

Interest in environmental issues and climate change.

Training Materials:

OpenFOAM: https://www.openfoam.com/

Paraview: https://www.paraview.org/

Project HiPerBorea website: https://hiperborea.omp.eu

Workplan:

Week 1: Training week

Week 2-3: Bibliography, OpenFOAM tutorials, first simulations on a supercomputer.

Week 4-5: Design and perform the scalability study, code profiling, and performance analysis on different supercomputers.

Week 6-7: Complete simulation of the watershed under study, post-processing and analysis.

Week 8: Report completion and presentation preparation.
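The scalability study planned for weeks 4-5 is typically summarized with two numbers per core count: speedup and parallel efficiency relative to a reference run. The following is a minimal Python sketch of that calculation for a strong-scaling test (fixed mesh, increasing core count). The function `strong_scaling` and the timings are hypothetical; the timings are not measured permaFoam results.

```python
from typing import Dict, Tuple


def strong_scaling(timings: Dict[int, float]) -> Dict[int, Tuple[float, float]]:
    """Return {cores: (speedup, efficiency)} relative to the smallest core count.

    timings maps a core count to the wall-clock time (in seconds) of the same
    simulation run on that many cores.
    """
    ref_cores = min(timings)
    ref_time = timings[ref_cores]
    results = {}
    for cores in sorted(timings):
        speedup = ref_time / timings[cores]   # how much faster than the reference run
        ideal = cores / ref_cores             # speedup expected with perfect scaling
        results[cores] = (speedup, speedup / ideal)
    return results


# Hypothetical wall-clock times in seconds (assumed values, for illustration only).
example_timings = {1000: 3600.0, 2000: 1900.0, 4000: 1050.0, 8000: 640.0}

for cores, (speedup, efficiency) in strong_scaling(example_timings).items():
    print(f"{cores:>6} cores: speedup {speedup:5.2f}, efficiency {efficiency:6.1%}")
```

Taking the smallest available run as the reference is a common choice when the mesh is too large to run on a single core.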

Final Product Description:

The main deliverable of the project will be a set of performance analyses of permaFoam for contrasting physical conditions and on different supercomputing systems (Occigen, Irene).

Adapting the Project: Increasing the Difficulty:

If the candidate is at ease in a supercomputing environment, the scalability study and code profiling can be extended.

Adapting the Project: Decreasing the Difficulty:

The “realistic” simulations planned for weeks 6-7 can be dropped to focus on the computational topics.

Resources:

The permaFoam solver; accounts on the DARI project A0060110794 for Occigen (CINES) and Irene (TGCC), on both of which OpenFOAM is installed as a default computational tool; input data to be delivered by L. Orgogozo and T. Xavier.

Organisation:

IDRIS, GET-Geosciences Environment Toulouse
