Is SparkR really faster than R?

Hi again!

Welcome to my PRACE SOHPC blog#2.

Before we begin, a quick reminder: my project is about the convergence of HPC and big data, and more details can be found here. Its main aim is to explore the strengths of each system (i.e., big data and HPC) so that each can benefit from the other. Several frameworks have been developed for this purpose; the two most popular are Hadoop and Spark, and for my use case I have worked with Spark.

Case study (IMERG precipitation datasets)

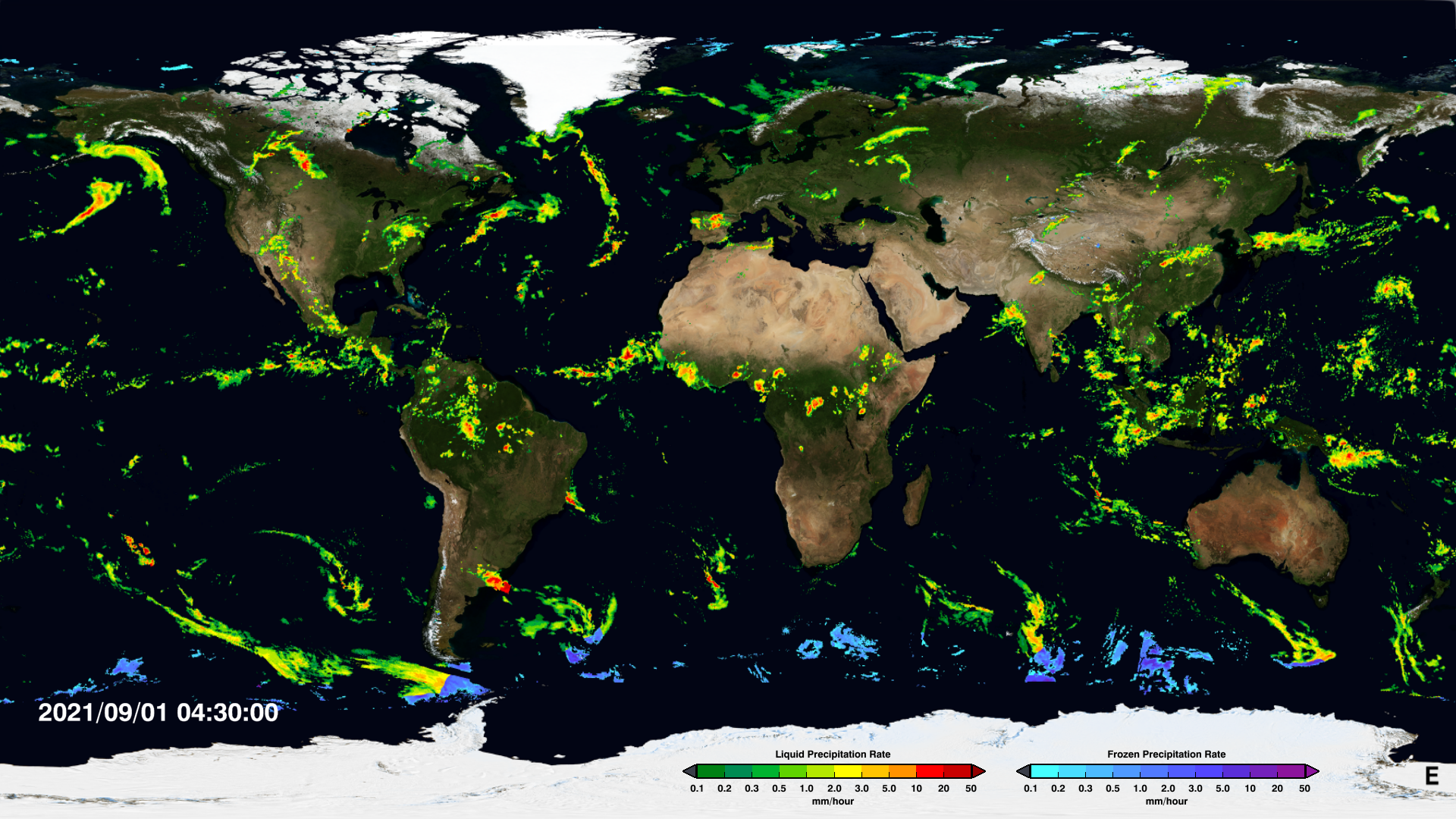

At the same time, I have a case study from my field of interest, i.e., precipitation. The use case deals with analysing the Integrated Multi-Satellite Retrievals for GPM (IMERG), a satellite precipitation product from the Global Precipitation Measurement (GPM) mission by the National Aeronautics and Space Administration (NASA) and the Japan Aerospace Exploration Agency (JAXA). The dataset comes in multiple temporal resolutions: half-hourly, daily, and monthly. Here, I have used the daily dataset from 2000 to 2020, which is approximately 230 GB (each daily file is around 30 MB). The main reason I'm using this dataset is that I have faced several problems when working with such large amounts of data, especially in R.

Although HPC systems usually have enough memory to load and analyse the datasets, R on HPC is rarely the first choice for big data analysis. But since my scripts are in R and I'm very comfortable with it, I want to stick with R to analyse the datasets. This is where Spark becomes useful. Spark initially supported Scala, Java, and Python, and later introduced an R interface. To work with Spark from R, there are two main libraries: SparkR (developed by the Spark core team) and sparklyr (developed by the R user community). Both libraries share some similar functions and are expected to converge into one. So, we are using both libraries.

The bottleneck and result

The main bottleneck in dealing with NetCDF datasets is that the data is stored in multiple dimensions. Therefore, before starting any analysis, the datasets should be converted to a more user-friendly format such as an R data frame or CSV (I prefer the .RDS format). The R script that converts NetCDF to an R data frame was slow: it takes approximately 235 seconds for 30 files of 30 MB each on the Little Big Data Cluster (LBD), which has 18 nodes with 48 cores each. Because the R script was designed for a single machine, it does not take advantage of the multi-core cluster.
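To make the conversion step concrete, here is a minimal sketch in R of turning one NetCDF grid into a long data frame and saving it as .RDS. It is not the script used in the project: the `ncdf4` package, the variable name `precipitationCal`, the coordinate names `lon` and `lat`, and the helper names `grid_to_long()` and `nc_to_rds()` are all assumptions for illustration.

```r
# Sketch only: helper names, variable names and file layout are assumptions,
# not the script used in this project.

# Turn a 2-D grid (rows = lon, columns = lat) into a long data frame.
grid_to_long <- function(lon, lat, values) {
  stopifnot(identical(dim(values), c(length(lon), length(lat))))
  # expand.grid() varies its first argument fastest, which matches R's
  # column-major order when the matrix is flattened with as.vector().
  coords <- expand.grid(lon = lon, lat = lat)
  coords$value <- as.vector(values)
  coords
}

# Read one daily file with the ncdf4 package (assumed to be installed) and
# save the resulting data frame as .RDS next to the input file.
nc_to_rds <- function(path, var_name = "precipitationCal") {
  nc <- ncdf4::nc_open(path)
  on.exit(ncdf4::nc_close(nc))
  lon <- as.vector(ncdf4::ncvar_get(nc, "lon"))
  lat <- as.vector(ncdf4::ncvar_get(nc, "lat"))
  # Assumes the variable comes back as a lon x lat matrix; use t(values)
  # if the file stores it as lat x lon.
  values <- ncdf4::ncvar_get(nc, var_name)
  df <- grid_to_long(lon, lat, values)
  out_path <- paste0(tools::file_path_sans_ext(path), ".rds")
  saveRDS(df, out_path)
  out_path
}

# Toy example that needs no NetCDF file: a 2 x 3 grid becomes 6 rows.
toy <- grid_to_long(lon = c(0.05, 0.15), lat = c(-10, 0, 10),
                    values = matrix(1:6, nrow = 2))
print(toy)
```

Calling `nc_to_rds()` in a plain `lapply()` over the 30 files would process them one after another on a single core, which is the kind of single-machine baseline described above.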

Therefore, the same R script was applied through the spark.lapply() function, which parallelizes the computation and distributes it among the nodes and their cores. We started with a small sample (30 files); the benchmark results (5 runs) are shown in Table 1. SparkR is significantly faster than R. For instance, on average, R takes 235 seconds to complete the process (i.e., write an .RDS file). In contrast, SparkR (writing to Parquet format) takes just 5.58 seconds, which is approximately 42 times faster than R.
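As a rough illustration of how such a comparison can be set up, the sketch below runs the same per-file worker either with base `lapply()` or with `SparkR::spark.lapply()` and records the elapsed time. The names `convert_one()` and `run_conversion()`, the variable name `precipitationCal`, and the file paths are hypothetical. For simplicity the worker writes .RDS files and assumes a file system shared by all nodes; the Parquet output used in the benchmark is not shown.

```r
# Sketch only: function names and paths are assumptions for illustration.

# Per-file worker: read one variable and save it as .RDS.
# Assumes the ncdf4 package is installed on every worker node.
convert_one <- function(path) {
  nc <- ncdf4::nc_open(path)
  on.exit(ncdf4::nc_close(nc))
  values <- ncdf4::ncvar_get(nc, "precipitationCal")  # assumed variable name
  out_path <- paste0(tools::file_path_sans_ext(path), ".rds")
  saveRDS(values, out_path)
  out_path  # return something small: results are collected on the driver
}

# Apply a worker to every file, either serially (plain R baseline) or
# distributed with SparkR::spark.lapply(), and report the elapsed time.
run_conversion <- function(files, worker, distributed = FALSE) {
  elapsed <- system.time({
    results <- if (distributed) {
      SparkR::spark.lapply(files, worker)
    } else {
      lapply(files, worker)
    }
  })[["elapsed"]]
  list(results = results, elapsed = elapsed)
}

# Example use on a cluster (requires a working Spark installation):
# library(SparkR)
# sparkR.session(appName = "imerg-conversion")
# files  <- list.files("/path/to/imerg", pattern = "\\.nc4$", full.names = TRUE)
# serial <- run_conversion(files, convert_one)
# spark  <- run_conversion(files, convert_one, distributed = TRUE)
# sparkR.session.stop()
```

Keeping the worker identical in both modes means any difference in `elapsed` comes from how the files are scheduled rather than from the conversion code itself.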

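Table 1: Run time in seconds for converting 30 files (5 benchmark runs).

| Method | Minimum (s) | Mean (s) | Maximum (s) |
|---|---|---|---|
| R | 231.17 | 235.34 | 239.57 |
| SparkR | 5.39 | 5.58 | 5.7 |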
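The "approximately 42 times" figure follows directly from the mean run times. A short check in R, with the timings copied from the table (the variable names are only for illustration):

```r
# Benchmark timings in seconds, copied from the table above.
timings <- data.frame(
  method = c("R", "SparkR"),
  min    = c(231.17, 5.39),
  mean   = c(235.34, 5.58),
  max    = c(239.57, 5.70)
)

r_mean     <- timings$mean[timings$method == "R"]
spark_mean <- timings$mean[timings$method == "SparkR"]

# Speedup of SparkR over plain R, based on the mean run time.
speedup <- r_mean / spark_mean
print(round(speedup, 1))  # 42.2

# Average time per file for the 30-file sample, in seconds.
per_file <- timings$mean / 30
names(per_file) <- timings$method
print(per_file)
```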

So, that’s it for blog#2!

Hope you enjoyed reading it. If you have any questions, please leave a comment and I will be happy to answer.

Bye..!
