Nothing more equal than two parallel lines.

There is nothing more equal than two parallel lines, which is why we use two parallel lines as the sign of equality, ‘=’. How is this related to programming? This particular fact isn’t, but we do use the concept of ‘parallel’ to describe a whole branch of High Performance Computing (HPC): parallel programming. Traditionally, software is written for serial execution, meaning that a problem is broken into a discrete series of instructions that are executed sequentially, one after another. We can consider a simple analogy. Imagine I start a small watchmaking company, which I may do if I fail at HPC, and I employ one person to be a fellow watchmaker. We can increase the number of watches we produce by each making a separate watch at any given time. Alternatively, we might set up an assembly line where I produce the watch faces while my co-worker prepares the straps. In HPC we refer to both of these ways of dividing work as doing work in parallel.

When dual core processing chips first became commercially available, they were marketed as letting the user read emails and surf the web at the same time. While this is balderdash, since these tasks require little computing power, it does give us an idea of what ‘dual core’ means. Essentially this is like me and my co-worker making watches: two heads are better than one. However, as we shall discuss, for everyday use it is difficult to take advantage of two watchmakers (or processors, as we shall refer to them in the program).

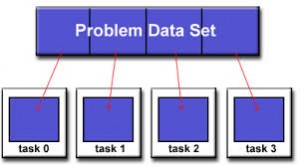

Here we have four processes, each getting its own portion of the data set (the supplies and tools in our example).
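To make the idea of "each process gets its own portion" a little more concrete, here is a minimal Python sketch of one common way to divide a data set into contiguous blocks. The helper names `block_range` and `split_supplies` and the ten numbered watch kits are my own illustrative assumptions; they are not taken from MPI or from ESPRESO, and a real program may well divide its data differently.

```python
def block_range(n_items, n_procs, rank):
    """Return the half-open range [start, stop) of items owned by `rank`.

    Hypothetical helper: items are split as evenly as possible, and the
    first `n_items % n_procs` processes take one extra item each.
    """
    base, extra = divmod(n_items, n_procs)
    start = rank * base + min(rank, extra)
    stop = start + base + (1 if rank < extra else 0)
    return start, stop


def split_supplies(supplies, n_procs):
    """Hand each process its own portion of the supplies before work starts."""
    portions = []
    for rank in range(n_procs):
        start, stop = block_range(len(supplies), n_procs, rank)
        portions.append(supplies[start:stop])
    return portions


if __name__ == "__main__":
    supplies = list(range(10))  # ten watch kits, numbered 0..9 (made-up data)
    for rank, portion in enumerate(split_supplies(supplies, 4)):
        print(f"process {rank} gets {portion}")
```

Because every process can work out its own range from nothing more than its rank and the total sizes, nobody has to ask a co-worker which kits are theirs once production has started.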

In our watchmaking example it is easy to orchestrate this parallel work, but we can be sure that completing a full batch of watches will require some sort of regular communication between me and my co-worker. In the assembly line example I will need to pass my co-worker my completed watch face so that he can attach the strap. This is simple for humans, who are very social entities, but a computer needs explicit instructions about when the watch face, or data, must be passed from me (process 1) to my co-worker (process 2). Consider also the first example, where my co-worker and I worked on entirely separate watches. We needed to divide the appropriate resources and tools before we started, since asking for them during production would force unscheduled communication, and interacting with co-workers is an effort we could all do without. From this we can start to see that communication between processes is the main obstacle in a parallel program.
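The assembly line can be sketched in a few lines of Python. To be clear about the assumptions: this is not MPI. I am using two threads and a `queue.Queue` from the standard library as a stand-in for two processes and the messages between them, and all the function names are made up for illustration. Threads share memory, whereas processes in a distributed memory program do not, so treat this only as a picture of the explicit "send" and "receive" steps.

```python
import queue
import threading


def make_faces(n_watches, outbox):
    """Process 1 in the analogy: build watch faces and pass each one on."""
    for serial in range(n_watches):
        outbox.put(f"face-{serial}")  # explicit "send" of the data
    outbox.put(None)                  # sentinel: no more faces are coming


def attach_straps(inbox, finished):
    """Process 2 in the analogy: wait for a face, then attach a strap."""
    while True:
        face = inbox.get()            # explicit "receive"; blocks until data arrives
        if face is None:
            break
        finished.append(f"{face}+strap")


def run_assembly_line(n_watches):
    """Run both workers at once and return the finished watches in order."""
    channel = queue.Queue()           # the only link between the two workers
    finished = []                     # written by the strap worker only
    workers = [
        threading.Thread(target=make_faces, args=(n_watches, channel)),
        threading.Thread(target=attach_straps, args=(channel, finished)),
    ]
    for worker in workers:
        worker.start()
    for worker in workers:
        worker.join()
    return finished


if __name__ == "__main__":
    print(run_assembly_line(3))
```

Notice that the strap worker spends its time blocked in `inbox.get()` whenever no face is ready. That waiting is exactly the communication cost described above: the second watchmaker can go no faster than the faces arrive.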

To implement parallel programming I am using MPI (Message Passing Interface), which is the de facto standard for parallel programming in a distributed memory environment. I discussed in my previous post, ‘A picture is worth a thousand thousand tiny numbers’, that I am visualising the results of ESPRESO, developed here at IT4Innovations. The name stands for ExaScale PaRallel FETI SOlver, a highly parallel program that can solve mathematical problems. Highly parallel means that it can run on many cores, with each process solving a part of the problem. A supercomputer is to a computer what a massive factory operation with hundreds (or thousands!) of workers is to my small watchmaking company, so now we can see why parallel programming is essential for efficient use of supercomputers.

I promise my next post will be more fun and will follow very shortly. We all get to talk about work every now and then ;D

Hi, I have started paying particular attention to your posts. I am planning on doing the HPC master's in the School of Maths, Trinity College. I think your blog is very interesting. I will be going through it and hopefully have fun interacting. Thank you very much.

Thanks a lot! Thrilled to hear that! Great to make new contact with other HPC enthusiasts, after all that’s what Summer of HPC is all about 🙂

Great post! Really understandable explanation of parallel computing.

Two parallel lines is nothing compared to five perpendicular red lines 🙂