One script to rule them all

As explained in the first post, my current project will make use of Aion, a supercomputer located at the University of Luxembourg. Aion consists of 318 compute nodes, totaling 40,704 compute cores, with a peak performance of about 1.70 PetaFLOP/s. This means that, at its best, it can perform 1.7 quadrillion floating-point operations per second! With that amount of computational resources, one question arises: how can we take advantage of all those nodes?
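A quick back-of-the-envelope check using only the figures quoted above (not official per-core specifications) shows how those numbers fit together:

$$
\frac{40\,704\ \text{cores}}{318\ \text{nodes}} = 128\ \text{cores per node},
\qquad
\frac{1.70\times10^{15}\ \text{FLOP/s}}{40\,704\ \text{cores}} \approx 4.18\times10^{10}\ \text{FLOP/s per core}
$$

In other words, each core contributes roughly 42 GFLOP/s to the peak, and it is only by keeping all of them busy at once that the machine approaches 1.7 PetaFLOP/s.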

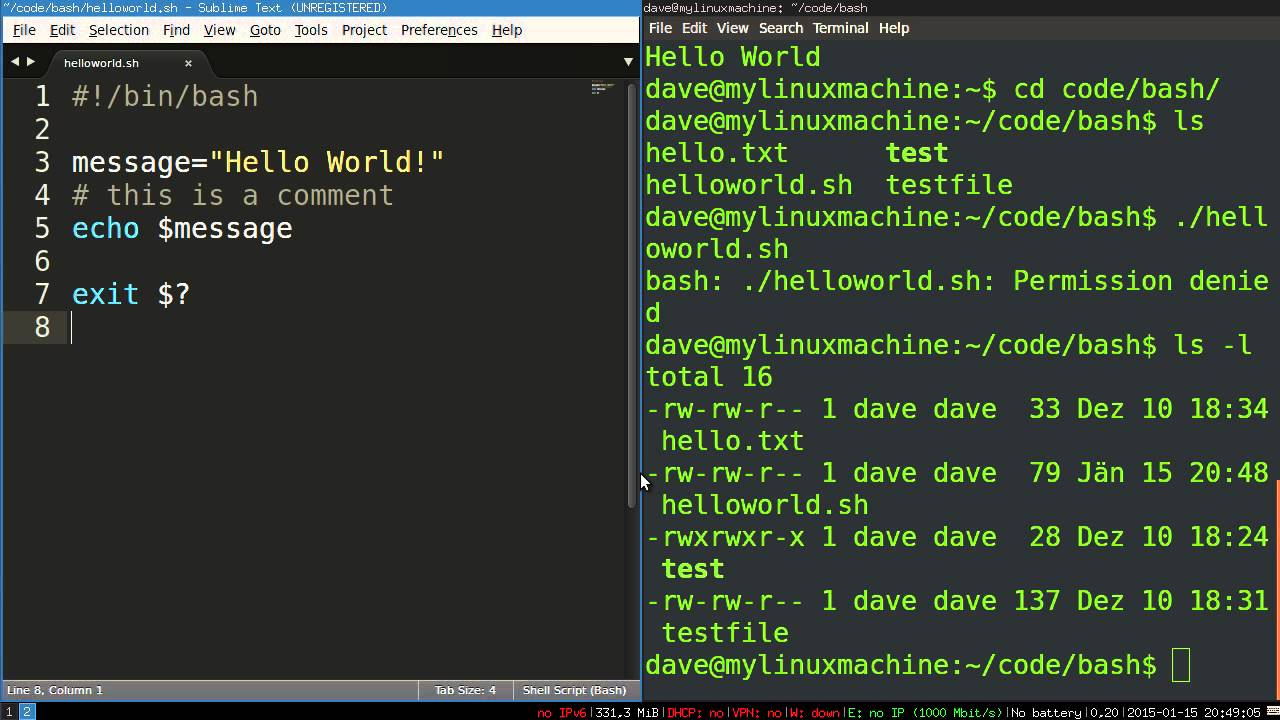

Bash... An old friend

Before answering this question, let me introduce Bash, a command language whose main task, so to speak, is to process the commands you give it, from simple things such as showing "Hello world" on your terminal to complex scripts that manage several files. A typical use is launching a specific executable: if you developed your own software, you surely want to test it as soon as you finish it, or in the meantime, to check that it works as intended. You can run it from a Bash script and adapt the script to your needs. Do you want it to run thousands of times for different arguments or cases? No problem! Bash also supports for loops, so you can automate this process.
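As a minimal sketch of that idea, the loop below runs one program once per input size and stores each run's output in its own log file. The `EXECUTABLE` variable and the `--size` option are hypothetical placeholders, not part of the original project; by default the script calls `echo`, so it runs anywhere.

```bash
#!/usr/bin/env bash
# Minimal sketch: run one executable many times, once per argument value.
# Assumption: EXECUTABLE is a hypothetical stand-in for your own software;
# it defaults to `echo` so the script can run on any machine.
set -euo pipefail

EXECUTABLE="${EXECUTABLE:-echo}"   # e.g. export EXECUTABLE=./my_program

for size in 1000 2000 4000 8000; do
    # Each run writes to its own log file, e.g. run_1000.log
    "$EXECUTABLE" "--size=$size" > "run_${size}.log"
    echo "finished size=$size"
done
```

For example, `EXECUTABLE=./my_program bash run_all.sh` would run a hypothetical `./my_program` four times, once per size, without any manual retyping.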

One caveat is that Bash is native to Unix-like operating systems, the best known being macOS and Linux, and guess what: more than 95% of the supercomputers in the world use Linux ;).

Communication breakdown

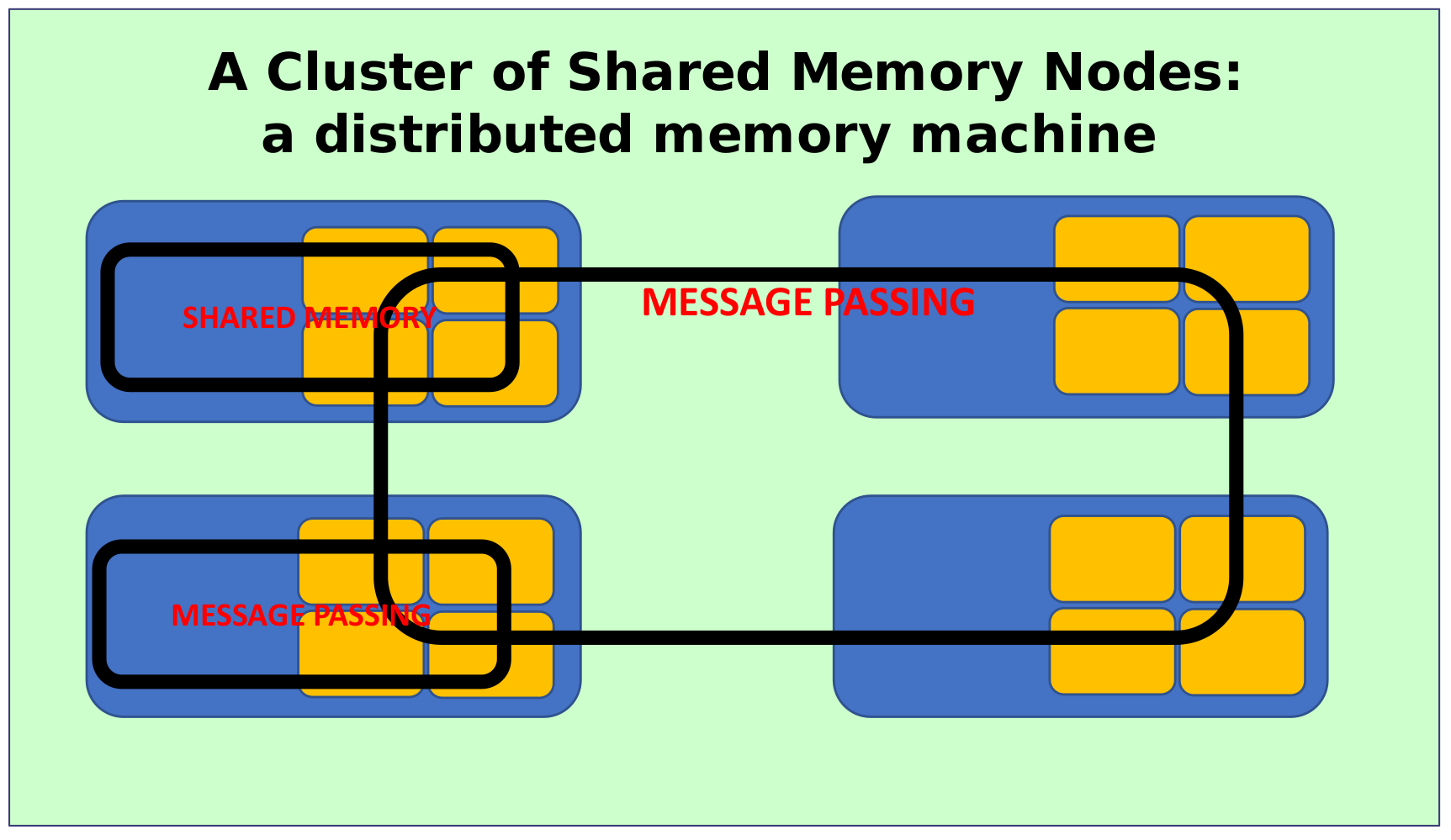

Alright, now we have a tool that seems to do what we are looking for, but here is another caveat: each compute node should be considered an independent resource, so if you want to make efficient use of every available node, the nodes must communicate with each other smoothly! Thankfully, all the nodes are connected by a fast InfiniBand (IB) HDR100 network, which can transfer 100 gigabits of data per second (theoretical peak). That is almost like sending a FullHD movie per second to a neighbouring node. Not bad at all.
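Converting the link speed from bits to bytes makes the movie comparison concrete. The 4 GB size of a FullHD movie is an assumption for illustration only:

$$
\frac{100\ \text{Gbit/s}}{8\ \text{bit/byte}} = 12.5\ \text{GB/s},
\qquad
t = \frac{4\ \text{GB}}{12.5\ \text{GB/s}} = 0.32\ \text{s}
$$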

But we are not done yet: we still need a framework to manage the communication between compute nodes, and this is where MPI (Message Passing Interface) comes into play. MPI is a standardized message-passing interface that can be used from programming languages like C, C++ or Fortran. It defines how the data being exchanged is described and which message-passing operations are available, and in practice it takes care of the communication back and forth between the nodes. This is very useful because, as we said at the beginning, each node is independent: there is no shared memory between nodes, so one node cannot read another node's memory unless the data is explicitly sent over the network, which is exactly what MPI does for us.

Now let’s automate... right?

Are we missing something at this point? It seems we have all the required tools to start, but… a supercomputer is normally a resource shared by several departments and scientists, so there are policies, priorities and limits on the resources available to each user. The supercomputer therefore needs software that acts like an orchestra conductor, organizing the submitted jobs according to those priorities and policies and then scheduling the resources for everyone. Aion uses a workload manager called SLURM, which keeps the supercomputer from falling into anarchy :).
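Putting Bash, MPI and SLURM together, a scaling study can be driven by a single script. The sketch below is one possible approach under assumed settings, not the actual script of this project: it writes one SLURM batch script per node count, each launching a hypothetical MPI executable `./my_mpi_app` with `srun` and 128 tasks per node (40,704 cores / 318 nodes). With `DRY_RUN=1` (the default) it only prints what it would submit; on a SLURM cluster, `DRY_RUN=0` hands each script to `sbatch`.

```bash
#!/usr/bin/env bash
# Minimal sketch of a scaling-study driver: one SLURM job script per node count.
# Assumptions (hypothetical): MPI executable ./my_mpi_app, 128 tasks per node,
# 30-minute time limit. Set DRY_RUN=0 on a SLURM cluster to really submit.
set -euo pipefail

APP="./my_mpi_app"        # hypothetical MPI executable
TASKS_PER_NODE=128        # 40704 cores / 318 nodes
DRY_RUN="${DRY_RUN:-1}"

for nodes in 1 2 4 8; do
    script="scaling_${nodes}n.sh"
    # Write the batch script; #SBATCH lines are read by SLURM, ignored by bash.
    cat > "$script" <<EOF
#!/usr/bin/env bash
#SBATCH --job-name=scaling_${nodes}n
#SBATCH --nodes=${nodes}
#SBATCH --ntasks-per-node=${TASKS_PER_NODE}
#SBATCH --time=00:30:00
#SBATCH --output=scaling_${nodes}n_%j.out

# srun starts one MPI rank per task across all allocated nodes
srun ${APP}
EOF
    if [ "$DRY_RUN" -eq 1 ]; then
        echo "would submit $script ($((nodes * TASKS_PER_NODE)) MPI ranks)"
    else
        sbatch "$script"
    fi
done
```

Generating the batch scripts instead of editing them by hand keeps every run identical except for the node count, which is what makes the timings comparable across runs.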

Now we know everything required to automate a performance analysis, my friends. I am really eager to show you the final results in the next post, so stay tuned.
