Visualization data pipeline in PyCOMPSs/COMPSs

Project reference: 1601

COMPSs is a programming model and runtime that aims at parallelizing sequential applications written in sequential programming languages (Java, Python, C/C++). The paradigm used to parallelize is the data-dependence tasks: the application developer selects the parts of the code (methods, functions) that will become tasks and indicates the directionality of the arguments of the tasks. With this information, the COMPSs runtime builds a data-dependence task graph, where the concurrenty of the applications at task level is inherent. The tasks are then executed in distributed resources (nodes in a cluster or a cloud). The runtime implement other features such as data transfer between nodes or elasticity in the cloud.

COMPSs has recently being extended to support Python codes, through its Python binding (PyCOMPSs) and it is being integrated with new strategies to store data (Hecuba with Cassandra DB, and dataClay a persistent objects library).

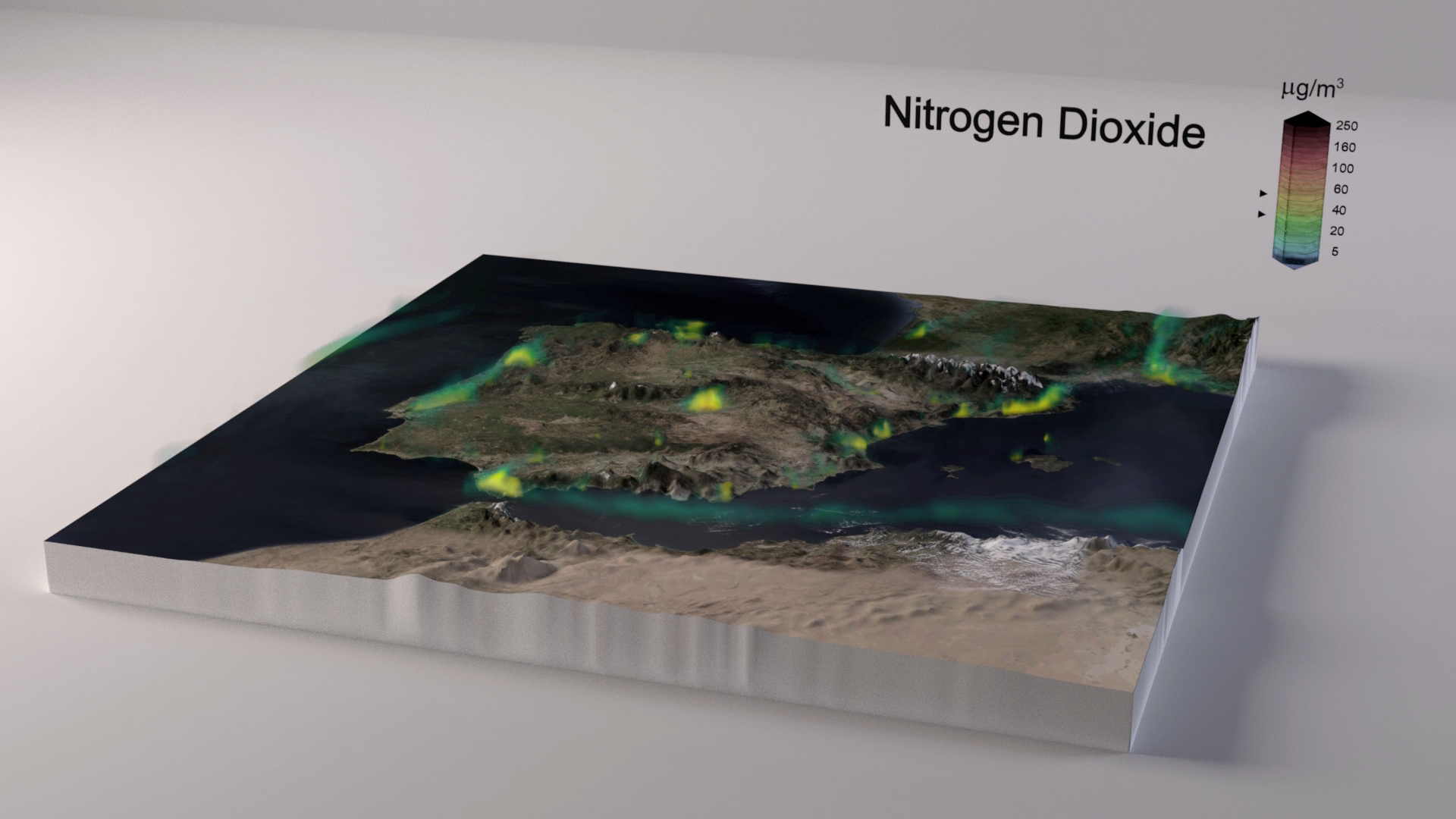

The objective of this SoHPC proposal will be to develop a parallel pipeline for processing large data sets for visualization purposes. In a typical visualization project we need to process and convert large volumes of data into intermediate formats for analysis and processing, and later on into formats specific for imaging software (2 or 3 dimensional meshes, particle, and volumetric information). In this project we aim to develop pipelines for the interpretation and visualization of climate and polution data, and another for material science data (electronic densities and atomic positions of simulations of new materials). The pipelines will be developed in Python using PyCOMPSs/COMPSs, and the final formats will be Maya and Blender.

The intern will need to learn the specifics of data formats associated to these research projects, learn the existing conversion routines and develop new (parallel) ones, and interact with the artist/animators to create a final movie and imagery from the data.

The executions could be done in the MareNostrum supercomputer and in the Workflows and Distributed Computing group private cloud, and the sample datasets will come from the Earth Sciences research department at BSC and the European Center of Excellence for Novel Materials Discovery.

Project Mentor: Fernando Cucchietti

Site Co-ordinator: Maria Ribera Sancho

Student: Sofia Kypraiou

Learning Outcomes:

The student will learn to program applications following PyCOMPSs/COMPSs programming model. The student will learn how to execute applications that run in parallel in clusters and clouds. The student will learn big data processing and conversion tools.

Student Prerequisites (compulsory):

Advanced programming in Python.

Expertise in the area of application used in the project (if any provided by the intern).

Student Prerequisites (desirable):

Knowledge of imaging software like Blender or Maya.

Training Materials:

There is a tutorial material available in the COMPSs website:

http://www.bsc.es/computer-sciences/grid-computing/comp-superscalar/downloads-and-documentation

Workplan:

Week 1/: Training week

Week 2/: Literature Review Preliminary Report (Plan writing)

Week 3 – 7/: Project Development

Week8/: Final Report write-up

Final Product Description:

The final product will be a parallel data processing pipeline that can be executed in supercomputers. The visual part will be videos and static images rendered from the sample data used in the project.

Adapting the Project: Increasing the Difficulty:

The project is on the appropriate cognitive level, taking into account the timeframe and the need to submit final working product and 2 reports.

Resources:

The student will need access to standard computing resources (laptop, internet connection) as well as an account in Marenostrum.

Organisation:

Barcelona Supercomputing Centre

[…] 1. Visualization data pipeline in PyCOMPSs/COMPSs […]