Is your code malleable?

Grey cast iron, white cast iron, ductile iron, malleable iron,……. Oh my gosh, so many types of cast iron! What is the difference? This was the question which always used to annoy me when I was graduating as a mechanical engineer. Well, the differences are in the chemical composition and the physical properties. All of the cast iron types tend to be brittle but there is one of them which is malleable. Yeah you have guessed it right, it is the malleable iron which has the ability to flex without breaking. Malleability is a common term used in material science and manufacturing industry and is defined as a material’s ability to deform under pressure. But can a HPC application be malleable too? This is the question I am tackling within my project in the PRACE Summer of HPC programme.

Polycrystalline structure of malleable iron at 100x magnification (Source: Wikipedia)

I had no idea that the term “malleability” is also being used in the HPC jargon until I started working on this project. Soon I came to know that like malleable materials, codes running on supercomputers can also be dynamically hammered into whatever size and shape we want. Many times, we have a lot of jobs submitted to the supercomputer, but because they can’t fit into the available compute nodes, they keep on waiting in the queue for long time. This reduces the throughput of the cluster and also the users have to wait longer to get the results. But if the already running jobs can be resized dynamically, then they can allow other incoming jobs to fit in the cluster and expand or shrink according to the available resources. More throughput and lower turn-around time can reduce the cost of the HPC system. Isn’t it amazing? To look into this problem, let’s have a glimpse on the basic categories of jobs according to their resize capability.

Five categories of jobs

- Rigid: A rigid job can’t be resized at all after its submission. The number of processes can only be specified by the user before its submission. It will not execute with fewer processors and will not make use of any additional processors. Most of the job types are rigid ones.

- Moldable: These are more flexible. The number of processes is set at the beginning of execution by the job scheduler (for example, Slurm), and the job initially configures itself to adapt to this number. After it begins execution, the job cannot be reconfigured. It has already conformed to the mold.

- Evolving: An evolving job is one that can dynamically request its resource requirements during its runtime. The job scheduler then checks whether the requested resources are available or not and allocates or deallocates the nodes according to the request.

- Malleable: Now comes the fourth type, the jobs which are malleable. These can adapt to changes in the number of processes during their execution. Note that, in this case, it is the job scheduler that takes the decision to resize the jobs in order to maximize the throughput of the cluster.

- Adaptive: This kind of job is the most flexible one. The application can itself take decisions whether to expand or shrink, or it can be hammered by the job scheduler according to the status of the available resources and queued jobs.

Malleability seems conceptually very good, but whether this scheme works in the actual scenario? To answer this question, I needed a production code to test it. So my mentor decided to use LAMMPS, to test malleability. LAMMPS stands for Large-scale Atomic/Molecular Massively Parallel Simulator and is being maintained by Sandia National Laboratories. It is a classic molecular dynamics code which is used throughout the scientific community.

A dynamic reconfiguration system relies on two main components: (1) a parallel runtime and (2) a resource management system (RMS) capable of reallocating the resources assigned to a job. In my project I am using Slurm Workload Manager as RMS, which is open-source, portable and highly scalable. Although, malleability can be implemented by only using RMS talking to the MPI application and using MPI spawning functionality, but it requires considerable effort to manage the whole set of data transfers among processes in different communicators. So, it was better to use a library called DMR API [1] which combines MPI, OmpSs and Slurm which substantially enhanced my productivity in converting the LAMMPS code into malleable, thanks to my mentor for developing such a wonderful library.

The most time-consuming part of my project was to understand how LAMMPS work. It has tens of thousands of lines of code with a good online documentation. But once understood, it was not very difficult to implement malleability. In fact, it only needed few hundred lines of extra code using DMR API.

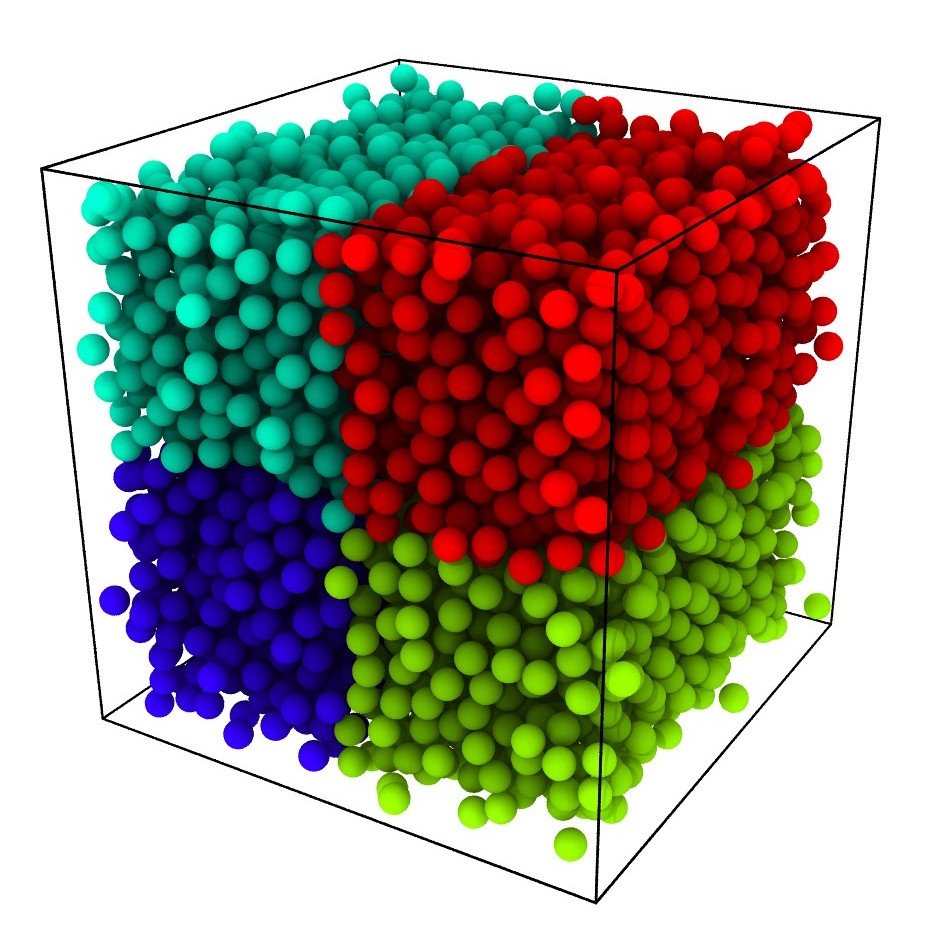

I can show you a basic rendering of malleable LAMMPS output. The color represents the processor on which the particles reside. The job was set to expand dynamically after every 50 iterations of verlet integration run. The force model was set to Lennard Jones and rendering was done using Ovito.

I am now in the end phase of my project, testing the code and getting some useful results. In the next blog post, I will show some performance charts of malleability with LAMMPS. I leave you here with a question and I want you think about your HPC application – Is your code malleable?

References:

[1] Sergio Iserte, Rafael Mayo, Enrique S. Quintana-Ortí, Vicenç Beltran , Antonio J. Peña, DMR API: Improving cluster productivity by turning applications into malleable, Elsevier 2018.

[2] D.G. Feitelson, L. Rudolph, Toward convergence in job schedulers for parallel supercomputers, in: Job Scheduling Strategies for Parallel Processing, 1162/1996, 1996, pp. 1–26.