Assembling genome, one nucleotide at a time

It has been a bit more than a month since my project began. Initially, the learning curve was rather steep as the project I am working on, ABySS, a bioinformatics software for assembling DNA sequences is complex and consists of many intertwined parts and processing stages. Still, as I got to explore its workings, at this point, there’s measureable progress.

Assembling genome is a long process and involves manipulating large amounts of DNA data, especially if the genome is long, as is the case with humans. As such, performance is of great importance, as you might be assembling the DNA multiple times (or of various species) and you only have so much time to do that. These jobs can last days, so even a seemingly unimportant performance boost can save you a day or two.

My first step was to determine the bottlenecks, parts of the code whose execution time directly impacts the execution time of the whole program. To quicken the process, I have contacted the original program creators at Canada’s Michael Smith Genome Sciences Centre. They were happy to quickly respond and point out possible improvements, as well as what I can investigate more into.

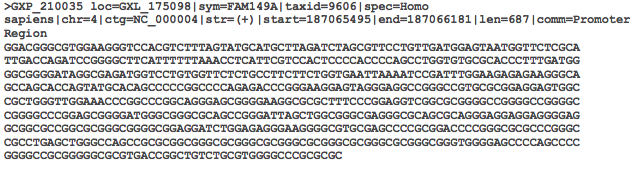

My first task was to improve an in-house DNA file reader. DNA is stored in FASTA or FASTQ file format, which is a text format holding nucleobase strings (A, C, T, G), along with optional DNA read quality, in FASTQ files. The main problem with the existing reader was that buffering was not done right and the reader was making more reads than necessary, and file reads are slow. What you ideally want to do is read files in big chunks and then process that in-memory. There is a library that does this for FASTA/FASTQ files called kseq, so my goal here was to integrate it with the existing reader. The integration was done in such a way that the original implementation persists, but the program will prefer to use the new library if possible (based on file content).

A FASTA file content.

After benchmarking the new file reader, I have found that it was twice as fast as the old one, so it was a success. However, that was only performance of reading single files without any processing whatsoever. Upon testing the whole program on real data, I have found that the performance improvement isn’t as much (up to 10% difference in wallclock time), but that was to be expected, as reading files is only a small part of the whole pipeline.

After this, I have moved on to see if I can make more significant speedups. Turns out, after profiling the execution, there is a particular data structure that slows down the process a lot. So called Bloom Filters, which are a probabilistic data structures for testing whether data exists in a set have to be constructed from DNA data. These filters are built directly from FASTA/FASTQ files and basically tell whether a particular DNA sequence exists in a set. As these filters are large, their build process is multithreaded in order to speed it up, but to make sure this process works correctly, each thread has to exclusively lock the part of the data structure it’s building, in order to prevent other threads from meddling. This causes contention between threads and a massive slow down, as threads have to wait one another to access different parts.

The solution? Lockless filters. Get rid of locks altogether (almost). Instead of having threads fight one another for access rights, what I have done is give each thread its own copy of the bloom filter to build and then merge them in the end. The speedup was very noticeable, and the process lasts half of what it did before. There is a catch, however, I have realized only later. The size of these filters varies from 500MB up to 40GB, and is a configurable parameter. If you are dealing with filters of size 40GB on a 128GB RAM node (which is the case at Salomon), you can not have more than 3 copies of the filter at a time. But it is possible to alleviate this problem, as you can specify how many threads should have their private copies of the lockless filter and the rest of the threads use a single filter with locks. This way, the more memory you have, the better the speed up, with a copy of the filter for every thread in ideal case with sufficient memory.

While I was doing all this work, I received an email from my mentor – I was to give a presentation about my progress to him and maybe a few other people. Sure, why not, this would be a good opportunity to sum it up for me, as well. Few days later, when I was about to present, I realized it was more than a few people! Most of the seats were filled and the IT4I Supercomputing Services director, Branislav Jansík himself came. The presentation lasted for about an hour and most of the people present had several questions, which was pretty cool, as they managed to understand the presentation, even though not all of them were in technical positions.

Giving a presentation on my work so far.

The presentation was a very useful experience though, as it was, in a way, practice for the final report. It was far from what a final report should look like in terms of quality, but thankfully, I have received a lot of feedback from my mentor that will help me get the final presentation right. So big thanks to Martin Mokrejš, who also took the photo above!

Cheers