Cluster Scale GPGPU with OpenCL

Previous posts about climate modeling and CESM were required as this post is the crème de la crème of CESM from my point of view.

Cluster scale GPGPU seems to be very nice, on paper, but as soon as we start discussing some real world problems, things become much more complex.

CESM was the test case for considering larger scale usage of GPU hardware througout our project.

In this post, I would like to present some of the issues that arise when GPU resources are to be used and shared between different processes on the same node in different execution models.

A few important things to notice (for the anxious and impatient):

- Don’t expect any template/recipe answers. The best method to solve these problems depends on the application itself and requires software engineering consideration.

- The same problems exist for NVIDIA CUDA as well.

- To employ GPUs effectively in cluster environments it is necessary to design the software architecture appropriately in advance.

GPU and CPU from a Cluster Perspective

Let’s start with a short introduction of terms and concepts that will guide us through (making it short).

CPUs are composed of multiple cores, and these in turn are numbered in two different ways:

- Physical hardware identifier (running ID) by the OS (Look at /proc/cpuinfo for example)

- Abstract number when using MPI or OpenMP

Therefore, when running a distributed/parallel application on multi-core systems or clusters, we don’t really know from the runtime library (MPI or OpenMP) what is the specific physical core or CPU we are using. In fact, this number is also not reproducible – on a different run, the same number can be related to a difference processor/core!

Another issue is regarding memory share between the cores. As most systems are SMP in a node, and cluster scale memory sharing is available, memory addresses can be globally visible (to some extent) by all cores in the cluster.

With GPUs, the concept is different. Existing APIs allow us to enumerate available devices based on their ID, which is closely related to the physical device identifier. Saying “Device 0“, will mean the same device over and over, even between different processes. This number is not guaranteed to address the same device after reboot, but in fact, we’re less worried about that.

Each GPU device in the discrete world (cards attached to server racks) have their own memory, which is limited, visible only to them and maybe by processes running on the same hosting node (some interconnects allow more global sharing, but it’s beyond the current scope).

Problems / Questions

So, considering these details we run into a resource allocation problem, when using a distributed model like MPI with GPUs. MPI processes running on the same node are not aware of each other (Some ideas or measures will be described later), so resource allocation becomes an issue.

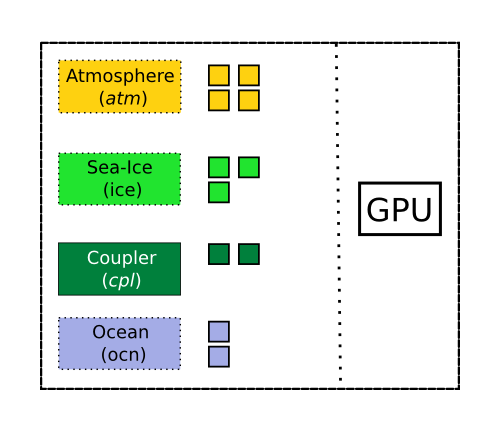

Multiple CESM processes are running in parallel on a node with only 1 GPU.

- How to allocate GPU in a node for different processes? We have 2 GPUs (ID 0 & 1), but MPI processes with ranks (32, 33 or 35)

- What happens when the number of GPUs is lower than running MPI processes? (1 GPU, but 4 processes or 2:6 ratio)

- How to synchronize between MPI processes on a node? (without locking the entire cluster through a communicator for each GPU access)

- What happens with memory allocation? If two processes allocate 4GB each on GPU memory one would fail with OUT_OF_MEMORY error, there is no virtualization available… Contrast to CPU with paging.

- Same can happen in a software with many components, unaware of each other (like CESM).

- What happens when processes of different jobs are running on the same node and need access to GPU resources?

Some Answers & Discussion

At this stage you should understand how vital and complex the questions above are. Might even be presented with increasing complexity.

For the last question, the answer would probably rely on the API we are given or some system-wide manager to use, since it’s a very complex task to perform and not that solved until today (I’m a good user, what about the others?).

One way of simplifying the problem would be to say: OK, we know our resources ahead of time, let’s allocate the resources when submitting the distributed job. This in general is fixed to an environment (a specific cluster), but cannot handle dynamic situations, or when environments can change. Such case is with CESM, which needs to run on variety of clusters and different hardware offering. Although still not trivial, it is less interesting to address.

We can also say: “OK, the cluster is ours and we are the only users”. Generally this is not the case and for those who can say it these problems are of less interest.

Synchronization

One issue was to synchronize between the processes on a system. Sometimes, for a single job, there is no real need to use MPI for splitting work on different cores, but submit one MPI process for each node, which in turn spawn other threads using OpenMP for joint computation.

With OpenMP we are given synchronization constructs for free inside a process so one major problem was solved (we are left with others).

It can (should) be noted that such a model would suggest submitting a job with allocation of a full node per process, otherwise, other jobs running in parallel will interfere and the model will fail.

When it comes to different MPI processes, we can use collective communications to identify which processes share the same node and create MPI constructs to communicate within that group (for barriers etc.). This will require further communication with the master/root process, but cannot be avoided in such case.

Consider the following example, where each process sends the root process a message with the name of the node it is running on:

char node_name[MPI_MAX_PROCESSOR_NAME] = { 0 };

int length = 0;

MPI_Get_processor_name(node_name, &length);

// Proceed with sending message and root work.

The root can process all messages and count how many processes are within each node, then send this value and allow those processes further create group constructs to synchronize with, based on specified scheme.

Some would like to rely on the way MPI implementation (e.g. OpenMPI, MPICH2) numbers different processes. Usually it can follow a predefined scheme (like processes on the same node always follow consecutive ordering, or odd/even partition), but it’s not a good idea to do so, as some nodes might differ from others (different amount of cores) and it requires full allocation of a node to work properly.

Final but not last, an implementation can decide of a synchronization construct to be used on a node basis for mutex operations, like locking a common in-memory file/pipe or so, methods which are quite native for Unix/Windows systems for IPC, but have their own limitations.

CESM and Simple Approach

The post is getting long, so I decided to leave some for the future as we also get more insight.

Since in CESM, all components share the same library and code base, it is possible to integrate a common synchronization construct for GPU support quite easily.

To simplify our lives and early development cycle, the decision was to use an isolated/singular execution model, where each computation using the GPU is standalone.

When having access to a GPU resource the scheme will be as follows: allocate memory, compute, copy results back, deallocate memory. The assumption (and guarantee) is that the computation is long enough to hide any of the overhead incurred by allocation/deallocation.

It allows us to test GPU performance for specific kernels and isolate all other issues that might come up in such complex systems.

More will follow…