Data preprocessing: challenges and mitigations

Last weekend I finally went to Prague! It was about time, since I live in the Czech Republic. Apart from being one of the cheapest European capitals I’ve been to, it has so many beautiful things to do, like visiting the astonishing castle or going on a relaxing walk along Charles Bridge. It also happened to be Pride week during my stay, and everyone was remarkably friendly. Walking alongside the parade was a great way to tour the city at a time when it was more full of life than ever.

But let’s dive into the topic of this blog: the quest for datasets and the data preprocessing adventure. I’ll pick up right where I left off. Action Units (the encodings of muscle group movements that I explained in the last post) are a relatively common topic of study. Many institutions have attempted to build solid datasets in which professional coders annotate, frame by frame, the action units present in videos.

The facial expression datasets out there fall into two broad categories: those in which subjects, usually actors, reenact combinations of action units at an investigator’s command, and those in which subjects are shown different stimuli in the hope that they produce facial expressions containing the action units under study.

As I explained in the last post, the goal of my project is to understand facial expressions in normal conversations. My plan was to detect the action units present in a face and then infer the expression, and thus the emotion, from them. Consequently, the ideal datasets for me were those in which facial expressions were elicited through stimuli, as these expressions are closer to the ones that appear in real conversations. This kind of dataset resembles my target problem the most, so in theory it should help my model perform best in real-life situations.

Fast forward to when I got access to a few terabytes of spontaneously elicited facial expression datasets (which required quite a lot of bureaucracy, as most of these datasets are not publicly accessible). Now it was time to preprocess that data consistently across the different datasets. The goal: a unified, robust collection of facial expressions with their annotated action units.

The first step in preprocessing this data was to crop the faces out of the video frames, as the models I’m going to do transfer learning from are trained on cropped faces and don’t include the face detection part of the problem. An interesting anecdote here: to crop the faces from the pictures, I found Python OpenCV’s Haar cascade face detector to be the best option (considering recall, accuracy and speed). However, after preprocessing the whole dataset, I noticed that a bunch of cropped faces were missing, and they all belonged to a small subset of the subjects. This puzzled me until I visually inspected the images and found that all these subjects had one thing in common: they were not Caucasian. Sadly, this kind of bias is very common among modern algorithms, as they are usually developed and tested on predominantly Caucasian datasets. In the end, I found an alternative, more racially inclusive version of the default detector, also provided by OpenCV.
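The cropping step, plus the kind of per-subject check that exposes missing detections like the ones described above, could look roughly like the sketch below. It is a minimal illustration, not the code used for the project: it assumes the `opencv-python` package (which ships the cascade XML files under `cv2.data.haarcascades`), and `crop_box`, `miss_rate_by_subject`, the 10% margin and the `detectMultiScale` parameters are my own hypothetical choices. The post doesn’t say which alternative cascade was used, so the file name is just a parameter here.

```python
from collections import defaultdict
from functools import lru_cache


@lru_cache(maxsize=None)
def load_cascade(cascade_file="haarcascade_frontalface_default.xml"):
    """Load (once) one of the Haar cascade XML files bundled with opencv-python."""
    import cv2  # imported lazily so the pure-Python helpers below run without OpenCV
    return cv2.CascadeClassifier(cv2.data.haarcascades + cascade_file)


def detect_faces(image_bgr, cascade_file="haarcascade_frontalface_default.xml"):
    """Return a list of (x, y, w, h) face boxes found in a BGR frame."""
    import cv2
    gray = cv2.cvtColor(image_bgr, cv2.COLOR_BGR2GRAY)
    boxes = load_cascade(cascade_file).detectMultiScale(gray, scaleFactor=1.1, minNeighbors=5)
    return [tuple(int(v) for v in box) for box in boxes]


def crop_box(boxes, img_w, img_h, margin=0.1):
    """Pick the largest detection and pad it by `margin`, clamped to the frame."""
    if not boxes:
        return None  # no face found: the caller should log this frame
    x, y, w, h = max(boxes, key=lambda b: b[2] * b[3])
    dx, dy = int(w * margin), int(h * margin)
    return (max(0, x - dx), max(0, y - dy), min(img_w, x + w + dx), min(img_h, y + h + dy))


def miss_rate_by_subject(results):
    """results: iterable of (subject_id, face_found). Returns subject -> miss rate."""
    totals, misses = defaultdict(int), defaultdict(int)
    for subject, found in results:
        totals[subject] += 1
        if not found:
            misses[subject] += 1
    return {s: misses[s] / totals[s] for s in totals}
```

For a frame loaded with `cv2.imread`, the crop would be `frame[y0:y1, x0:x1]` with `x0, y0, x1, y1 = crop_box(detect_faces(frame), frame.shape[1], frame.shape[0])`. Sorting subjects by miss rate makes a pattern like “all the missing faces come from a few subjects” visible without eyeballing the whole dataset, and passing a different `cascade_file` (OpenCV also ships files such as `haarcascade_frontalface_alt2.xml`) lets you compare detectors on exactly those subjects.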

Another issue I faced was unifying the action unit encodings across the different datasets. Labelling the active action units is not as trivial a job as labelling the brand of a car or the presence of a face in a picture. Facial expressions can be very complex, and action units can interfere with each other. Besides, it takes professional annotators to label them accurately.

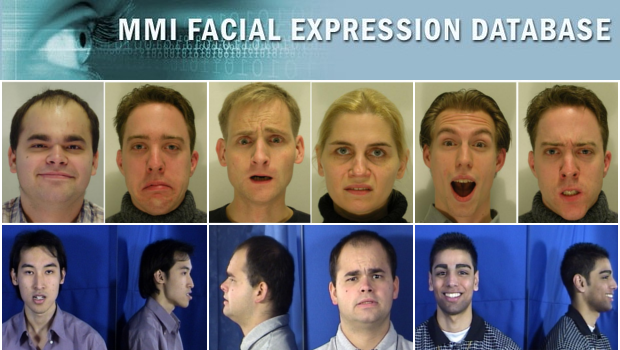

I used four main datasets: BP4D-Spontaneous (a high-resolution spontaneous 3D and 2D dynamic facial expression database), of which I used only the 2D data; DISFA (the Denver Intensity of Spontaneous Facial Action Database); the Cohn-Kanade AU-Coded Expression Database; and the MMI facial expression dataset.

BP4D was by far the dataset. To smooth out the differences in how each dataset annotated AUs, I decided to bundle similar AUs together: I grouped those that described a similar muscle movement and weren’t used to determine the presence of dramatically different emotions.
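To make the bundling idea concrete, here is a minimal sketch. The groupings in `BUNDLES` are hypothetical examples chosen only for illustration (the post doesn’t list the actual bundles), and the `annotated_aus` argument is my own assumption about handling datasets that never code some AUs: those bundles become `None` (unknown) rather than 0, so they can be masked out later instead of being counted as negatives.

```python
# Hypothetical bundles for illustration only; NOT the grouping used in the post.
BUNDLES = {
    "brow_raise": {1, 2},              # AU1 inner + AU2 outer brow raiser
    "brow_lower": {4},                 # AU4 brow lowerer
    "cheek_lid": {6, 7},               # AU6 cheek raiser + AU7 lid tightener
    "lip_corner_pull": {12},           # AU12 lip corner puller
    "lip_corner_depress": {15},        # AU15 lip corner depressor
    "mouth_open": {25, 26, 27},        # AU25 lips part, AU26 jaw drop, AU27 mouth stretch
}
BUNDLE_ORDER = list(BUNDLES)  # fixed label order for the unified multi-hot vector


def bundle_labels(active_aus, annotated_aus=None):
    """Map a frame's active AU numbers to one 0/1 (or None) value per bundle.

    A bundle is 1 if any of its member AUs is active. If `annotated_aus` is
    given and the source dataset codes none of a bundle's AUs, the value is
    None (unknown) instead of 0.
    """
    active = set(active_aus)
    annotated = None if annotated_aus is None else set(annotated_aus)
    labels = []
    for name in BUNDLE_ORDER:
        members = BUNDLES[name]
        if annotated is not None and not (members & annotated):
            labels.append(None)
        else:
            labels.append(int(bool(members & active)))
    return labels
```

For example, `bundle_labels({1, 12})` yields `[1, 0, 0, 1, 0, 0]`, so a frame annotated with AU1 in one dataset and one annotated with AU2 in another end up with the same `brow_raise` label.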

Finally, another big issue I faced while preprocessing the dataset was its imbalance. As these datasets contained spontaneous emotions, the frequency of each action unit varied enormously. Action units related to common expressions, like those involved in smiling or frowning, were far more frequent than those related to disgust or fear, as these emotions were elicited more rarely. One could argue that this distribution probably matches the distribution of AUs in real conversations, so a model that detects common AUs with very high accuracy and rare ones with low accuracy would be acceptable. However, this behaviour is highly undesirable: in social interactions, uncommon facial expressions matter more, because they signal behaviour that is out of the norm, which is exactly what one most needs to be aware of.
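One way to put a number on that imbalance is sketched below. It counts how many frames contain each label and computes the per-label imbalance ratio (IRLbl: the most frequent label’s count divided by each label’s count) and its mean. This measure is borrowed from the multi-label learning literature, not something the post says was used, and the frames are hypothetical.

```python
from collections import Counter


def label_counts(label_sets):
    """Count how many frames contain each label (multi-label: one frame, many labels)."""
    counts = Counter()
    for labels in label_sets:
        counts.update(set(labels))
    return counts


def imbalance_ratios(counts):
    """IRLbl per label: count of the most frequent label / count of this label."""
    top = max(counts.values())
    return {label: top / c for label, c in counts.items() if c > 0}


def mean_imbalance_ratio(counts):
    """MeanIR: average IRLbl across labels; 1.0 means perfectly balanced."""
    ratios = imbalance_ratios(counts)
    return sum(ratios.values()) / len(ratios)


# Hypothetical frames: AU12 (lip corner puller, part of smiling) dominates,
# while AU9 (nose wrinkler, typical of disgust) appears only once.
frames = [{"AU6", "AU12"}, {"AU12"}, {"AU4", "AU12"}, {"AU9"}]
counts = label_counts(frames)
print(mean_imbalance_ratio(counts))  # 2.5
```

Tracking these numbers before and after any rebalancing step gives a simple way to check whether the rare AUs actually became less under-represented.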

To mitigate this issue, I tried several approaches. The first, and the simplest, was removing instances of the most frequent classes. To be honest, though, this is not as simple as it seems when the problem at hand is a multi-label problem (in which each image can have multiple labels). The catch is that when we remove an image containing a very frequent label, we also remove its other labels, which might be rare. I used some heuristics and greedy algorithms to find a good set of images to remove, one that reduces the frequency of the popular labels while preserving the rare ones. Something along the lines of removing the images with the highest ratio of popular to unpopular labels.
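A minimal sketch of that kind of greedy removal is shown below, assuming each image is represented by the set of its (bundled) labels and that a label counts as “popular” while its frequency exceeds a chosen `target`. At every step it drops the remaining image with the highest ratio of popular to unpopular labels, and it stops once no image contains an over-represented label. `greedy_undersample` and `target` are hypothetical names; this illustrates the heuristic described above and is not the exact algorithm used for the project.

```python
from collections import Counter


def greedy_undersample(label_sets, target):
    """Return the sorted indices of the images to keep.

    A label is 'popular' while its count exceeds `target`. Each step removes
    the kept image with the highest popular / (1 + unpopular) label ratio.
    """
    counts = Counter()
    for labels in label_sets:
        counts.update(set(labels))
    kept = set(range(len(label_sets)))

    def score(i):
        labels = set(label_sets[i])
        popular = sum(1 for label in labels if counts[label] > target)
        unpopular = len(labels) - popular
        return popular / (1 + unpopular), popular

    while kept:
        # Highest ratio first; ties go to the lowest index for determinism.
        best = max(kept, key=lambda i: (score(i)[0], -i))
        if score(best)[1] == 0:
            break  # no kept image contains an over-represented label
        kept.remove(best)
        counts.subtract(set(label_sets[best]))  # update label frequencies
    return sorted(kept)


images = [{"smile"}, {"smile"}, {"smile", "disgust"}, {"smile"}, {"disgust"}, {"fear"}]
print(greedy_undersample(images, target=2))  # [2, 3, 4, 5]
```

In the example, the two smile-only images go first, and the image that also carries the rare `disgust` label survives because its ratio is lower. The `1 +` in the denominator avoids dividing by zero for single-label images. Scores are recomputed naively on every step, which is quadratic in the number of images; that is fine for a sketch but would need a priority queue on a multi-terabyte dataset.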

I will go into more detail about the imbalance in the next post, where I explain how it affects the training process and the overall accuracy of the model.

In summary, data preprocessing can be a very long process, especially when the amount of data to handle is large. However, if you tackle the problems one at a time, the time is well spent, because you can’t train a quality network without quality data to start with. So stick around for the next post, where I detail how I used the data I just preprocessed to train the neural network: how I explored different hyperparameters and network topologies, which metrics I used, and how they affected the performance of the model.
