Flatpacking the data processing system. Innovative IKEA “FåKopp kaffe” system – a case study

After two months of intensive work here in the heart of the Scottish pride plains, the time has come to sum up the results and check them against the initial expectations.

My project was developed as part of a data processing framework for the Environmental Monitoring Baseline (EMB). The idea is simple: there is a plethora of sensors around the UK that measure various environmental indicators such as groundwater levels, water pH or seismicity. These datasets are publicly available on the website of the British Geological Survey.

In terms of data analysis, we are far past manual supervision. Therefore, in addition to proper dataset labelling and management, there is a need for a robust processing framework that also aids valid data acquisition. The task is fairly simple once we can identify our key needs, but this early stage of targeting the crucial aspects of our future system should not be underestimated, as its impact tends to grow the further the work on the system advances. Fortunately, environmental sensors, being part of the IoT world, exhibit some particular characteristics that more or less translate to the nature of the data our processing framework will consume. Firstly, we can expect the data stream to be quite intensive and, to a first approximation, continuous – when multiple sensors report every several minutes, the aggregated data flow rate becomes a significant issue. Secondly, we need the analysis to happen in real time. Thirdly, the data might be of use later, so it needs to be stored persistently. Lastly, we need to run our system on HPC because it is Summer of HPC, that’s why. But seriously, systems like these require a reasonably powerful machine to run.
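To get a feel for the first requirement, here is a back-of-the-envelope sketch of how the aggregated rate could be estimated. The sensor count, reporting interval and reading size are made-up illustrative values, not actual EMB figures, and `aggregate_rate` is a hypothetical helper.

```python
from __future__ import annotations

# Back-of-the-envelope estimate of the aggregated sensor data rate.
# Every number used here is an illustrative assumption, not an EMB figure.


def aggregate_rate(n_sensors: int, interval_s: float, reading_bytes: int) -> tuple[float, float]:
    """Return (readings per second, bytes per second) for the whole network."""
    readings_per_s = n_sensors / interval_s
    return readings_per_s, readings_per_s * reading_bytes


# Assumed: 6000 sensors, each reporting a 200-byte reading every 5 minutes.
readings_per_s, bytes_per_s = aggregate_rate(n_sensors=6000, interval_s=300, reading_bytes=200)
readings_per_day = readings_per_s * 86_400  # seconds in a day

print(f"{readings_per_s:.0f} readings/s, {bytes_per_s:.0f} B/s, {readings_per_day:.0f} readings/day")
```

The rate grows linearly with the number of sensors and inversely with the reporting interval, so adding sensors or shortening the interval quickly multiplies what the storage and processing layers have to absorb.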

So, either by an extensive search on the web or by experience, we can relatively quickly find the necessary tools to match our requirements. While we might not be able to pinpoint every aspect precisely, there is no need to worry because we will take advantage of a software engineering principle called modularity. In a modular approach, we want our software components separated and grouped by functionality, so that we can replace one that does not exactly fit our needs with a more suitable one later in the project’s timeline. It is very much like creating our tiny Lego® bricks, then grouping them and finally putting them together to form a desired shape.
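The Lego idea can be sketched in a few lines of Python. This is only an illustration of swapping components behind a common interface; the `Storage` interface, both storage classes and `ingest` are hypothetical names, not part of the actual project.

```python
from __future__ import annotations

from typing import Protocol


class Storage(Protocol):
    """The 'brick shape': anything with these two methods fits."""

    def save(self, reading: dict) -> None: ...
    def count(self) -> int: ...


class InMemoryStorage:
    """Keeps every reading in a list."""

    def __init__(self) -> None:
        self._rows: list[dict] = []

    def save(self, reading: dict) -> None:
        self._rows.append(reading)

    def count(self) -> int:
        return len(self._rows)


class LatestOnlyStorage:
    """Alternative brick: keeps only the most recent reading per sensor."""

    def __init__(self) -> None:
        self._latest: dict[str, dict] = {}

    def save(self, reading: dict) -> None:
        self._latest[reading["sensor"]] = reading

    def count(self) -> int:
        return len(self._latest)


def ingest(readings: list[dict], storage: Storage) -> int:
    """Depends only on the Storage interface, not on a concrete class."""
    for reading in readings:
        storage.save(reading)
    return storage.count()


sample = [
    {"sensor": "a", "value": 1.0},
    {"sensor": "b", "value": 2.0},
    {"sensor": "a", "value": 3.0},
]
print(ingest(sample, InMemoryStorage()))    # stores all three readings
print(ingest(sample, LatestOnlyStorage()))  # stores one reading per sensor
```

Because `ingest` never names a concrete storage class, replacing one brick with another does not require touching the rest of the code.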

Having reflected on how important the initial problem identification is, let’s dig into the software-requirement matching phase. All software components are open source, with the majority of them released under the Apache license.

Combining the first requirement with the third one, we have a demand for a high-velocity, high-throughput persistent storage database. To satisfy this, we can use Apache Cassandra, a wide-column, NoSQL, distributed database that supports fast writes and reads while maintaining well-defined, consistent behaviour. So we can safely retrieve data while we are simultaneously writing to the database. Real-time analysis on HPC systems can be handled by Apache Spark, a large-scale data processing engine that supports convenient database-like data handling. Additionally, Spark code, being written in a functional style, naturally supports parallel execution and optimizes for minimal memory reads and writes – this is especially visible when we use the Scala programming language to code in Spark.

In order to make our processing architecture HPC-ready, reproducible and easy to set up, we will containerize our software components. This means putting each “module” of our modular system in a separate container. Containers provide a way of placing our application in a separate namespace. What this roughly means is that our containerized applications will not be able to mess with our system environment variables, modify networking settings or, as another example, use restricted disk volumes. As a bonus, containers use few system resources and are designed to be quickly deployed and run with minimal system babysitting. Basically, we put our rambunctious kids (applications) in a tiny, little playground (containers) with tall walls (isolated namespace) which also happens to be inflatable so we can deploy it anytime (rapid, automated startup).
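Going back to the functional style mentioned for Spark, the sketch below shows the general filter/map/reduce shape of such a computation. It is plain Python with made-up readings, not the Spark API, and `mean_ph_per_sensor` is a hypothetical function; it only illustrates why side-effect-free steps are easy to run in parallel.

```python
from functools import reduce

# Made-up sample readings; None marks a failed measurement.
readings = [
    {"sensor": "bh-01", "ph": 7.0},
    {"sensor": "bh-01", "ph": 7.5},
    {"sensor": "bh-02", "ph": 6.5},
    {"sensor": "bh-02", "ph": None},
]


def mean_ph_per_sensor(rows):
    """Average pH per sensor, written as filter -> map -> reduce."""
    # Drop failed measurements.
    valid = filter(lambda r: r["ph"] is not None, rows)
    # Turn each reading into (key, (sum, count)).
    pairs = map(lambda r: (r["sensor"], (r["ph"], 1)), valid)

    def merge(acc, pair):
        # Build a new accumulator instead of mutating shared state.
        key, (total, n) = pair
        old_total, old_n = acc.get(key, (0.0, 0))
        return {**acc, key: (old_total + total, old_n + n)}

    sums = reduce(merge, pairs, {})
    return {key: total / n for key, (total, n) in sums.items()}


print(mean_ph_per_sensor(readings))
```

Since none of the steps modifies the input or any shared variable, the rows could in principle be split into chunks, processed independently and the partial sums and counts combined afterwards, which is the property a parallel engine relies on.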

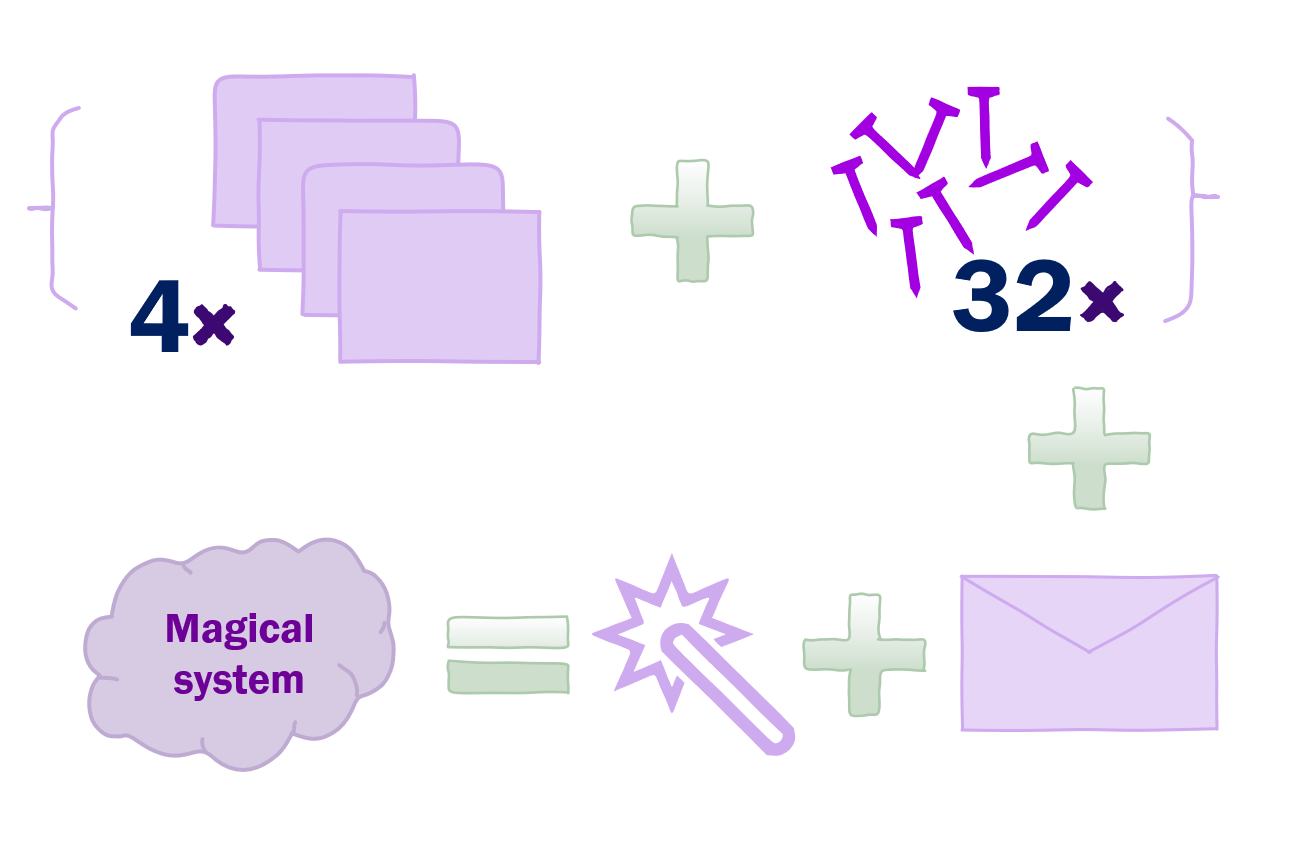

How to build a successful processing system. This useless IKEA-styled diagram does not explain it. Idk, just get some containers using basic tools, add some messaging and programming magic and it might work. When it doesn’t, no refunds.

Hold on a minute – we have ended up with a modular architecture, but hey, since everything is written in a different language and uses different standards, how can we get all those modules to communicate with each other? This is our hidden requirement – seamless communication across different services – and to solve that issue we use Apache Kafka. Kafka is very much like a flexible mailman-contortionist. You tell him to squeeze into a mailbox – done, oddly shaped cat-sized door? – no sweat, inverted triangle with a negative surface? – next, please. Whatever you throw at him, he will gladly take and deliver with a smile on his face (UPS® couriers, please learn from that). So in programming terms, we will inject a tiny bit of code into each of our applications – remember, those cheeky rascals sit in the high-security playgrounds, but we are working on connecting all the playgrounds so they can pass toys, knives or whatever else between themselves. This tiny bit of code will be responsible for receiving messages via Kafka, sending them, or both. Moreover, in order to tame our messy, unrestrained communication, we provide schemas which tell each application what it can send or receive and in what format it has to write or read the data.
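To make the “tiny bit of code plus schema” idea concrete, here is a minimal sketch. `ToyBroker` is an in-memory stand-in written for this illustration and is not the Kafka API; the schema format, the topic name and the field names are all assumptions.

```python
import json
from collections import defaultdict, deque

# Hypothetical schema: field name -> required Python type.
READING_SCHEMA = {"sensor": str, "timestamp": str, "value": float}


def validate(message: dict, schema: dict) -> None:
    """Raise ValueError unless the message has exactly the schema's fields and types."""
    if set(message) != set(schema):
        raise ValueError(f"expected fields {sorted(schema)}, got {sorted(message)}")
    for field, expected_type in schema.items():
        if not isinstance(message[field], expected_type):
            raise ValueError(f"field {field!r} must be {expected_type.__name__}")


class ToyBroker:
    """In-memory stand-in for a message broker (NOT the Kafka API)."""

    def __init__(self) -> None:
        self._topics = defaultdict(deque)  # topic name -> queue of raw bytes

    def send(self, topic: str, message: dict, schema: dict) -> None:
        validate(message, schema)  # sender checks before writing
        self._topics[topic].append(json.dumps(message).encode("utf-8"))

    def receive(self, topic: str, schema: dict) -> dict:
        raw = self._topics[topic].popleft()  # oldest message first
        message = json.loads(raw.decode("utf-8"))
        validate(message, schema)  # receiver checks after reading
        return message


broker = ToyBroker()
reading = {"sensor": "bh-01", "timestamp": "2018-08-01T12:00:00Z", "value": 7.25}
broker.send("readings", reading, READING_SCHEMA)
received = broker.receive("readings", READING_SCHEMA)
print(received)
```

The applications only ever exchange bytes, so each one can be written in a different language; the shared schema is what keeps the sender and the receiver in agreement about what those bytes mean.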

By following the breadcrumbs we have arrived at our final sketch of the architecture. Putting it all into a consistent framework is a matter of time, amounts of coffee and programming skills, but all in all, we have managed to come up with a decent design that meets our needs. Shake your own hand. You’ve earned it.

I have got a name already – the FåKopp. The name roughly translates to “get a cup” (of coffee obviously, or scotch since we are in Edinburgh, right?) and relates to the amount of caffeine required to complete the project.

That’s all, folks.

Featured image

This is a sneak peek of the actual architecture.