Learning is gaining

We are living through a real parallel computing revolution. What used to be a concern only for scientists at the forefront has become mainstream, thanks to high-performance computers and new programming environments.

During the first half of my time at the Barcelona Supercomputing Centre (BSC) I have learned a lot of new techniques and programming models, expanded my skills in several programming languages, and more. As part of my project, "Task based parallelization using a top-down approach", with my mentor Rosa M. Badia, I have learned the OmpSs programming model and the analysis tools Tareador, Dimemas and Paraver, all of which were developed at BSC. To focus on learning the tools, I worked with textbook example codes such as Multisort, Cholesky, Heat Diffusion, the Mandelbrot set, etc.

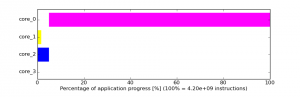

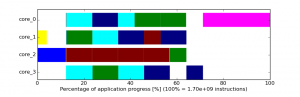

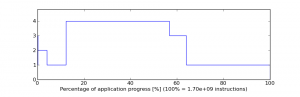

While analysing the codes I got several different results, depending on the number of defined tasks, the number of cores, the filtered objects, etc. The results show me where a specific code can be improved, either in its sequential parts or in its parallelization. As an example, let me show a multisort application with the sort defined as a single task (Fig. 1) and with the sort split into multiple tasks (Fig. 2). As expected, the multiple-task sort executes faster than the single-task sort. Furthermore, the tasks are interleaved across the cores, and as we increase the number of cores the execution gets faster.

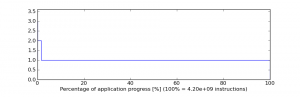

Looking at the number of active cores, we can see that the first simulation runs only the initialization and clear functions in parallel, but not the multisort itself (Fig. 3). The second simulation, on the other hand, also runs the multisort part of the code in parallel (Fig. 4).

Working with this environment leads to appropriate parallelization strategies relatively simply, and it helps to identify the interactions between tasks that must be preserved when the application is coded in parallel.
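To make the idea of task interactions more concrete, here is a minimal sketch of how a multiple-task sort could be written with OmpSs-style task pragmas. It is not the BSC multisort code: the two-way split, the insertion-sort base case, and the names `basic_sort`, `merge_halves` and `MIN_TASK_SIZE` are assumptions made for illustration. The interaction that has to be guaranteed here is that both halves are sorted before they are merged, which the `taskwait` expresses.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define MIN_TASK_SIZE 4  /* hypothetical cutoff: smaller ranges are sorted sequentially */

/* Sequential base case: plain insertion sort. */
static void basic_sort(int *data, size_t n)
{
    for (size_t i = 1; i < n; i++) {
        int key = data[i];
        size_t j = i;
        while (j > 0 && data[j - 1] > key) {
            data[j] = data[j - 1];
            j--;
        }
        data[j] = key;
    }
}

/* Merge the sorted ranges data[0..mid) and data[mid..n), using tmp as scratch. */
static void merge_halves(int *data, int *tmp, size_t mid, size_t n)
{
    size_t i = 0, j = mid, k = 0;
    while (i < mid && j < n)
        tmp[k++] = (data[j] < data[i]) ? data[j++] : data[i++];
    while (i < mid) tmp[k++] = data[i++];
    while (j < n)   tmp[k++] = data[j++];
    memcpy(data, tmp, n * sizeof *data);
}

/* Recursive sort: each recursive call is created as its own task. */
void multisort(int *data, int *tmp, size_t n)
{
    assert(data != NULL && tmp != NULL);
    if (n <= MIN_TASK_SIZE) {
        basic_sort(data, n);
        return;
    }
    size_t mid = n / 2;

    /* The two halves touch disjoint parts of data and tmp,
       so they can run as independent tasks. */
    #pragma omp task
    multisort(data, tmp, mid);
    #pragma omp task
    multisort(data + mid, tmp + mid, n - mid);

    /* Both halves must be finished before the merge reads them. */
    #pragma omp taskwait
    merge_halves(data, tmp, mid, n);
}
```

A compiler that does not know these pragmas simply ignores them, so the same source also runs as an ordinary sequential sort, which is handy for checking correctness before looking at traces.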

Hi Vito,

Nice post! I just have some questions:

1) For the multisort with multiple tasks, is the parallelization done for each recursive call (following a tree parallelization)?

2) Which tools did you use for figures 1 and 2? Are they generated with Dimemas and Tareador and then visualized with Paraver?

3) What do the colors mean in figures 1 and 2?

4) How did you obtain figures 3 and 4? With options of Paraver? (Can you specify?)

Thank you,

Luna

Hi Luna,

1) Yes. Each recursive call is defined as a task, and any thread can execute it.

2) Yes, but I used the online version, the Tareador Portal.

3) Each color represents a specific task defined with the Tareador syntax: `tareador_start_task("name of task"); /* TASK */ tareador_end_task();`

To be specific: yellow is clear(), blue is initialization(), and all other colors are the multisort() function.

4) The "Tasks active" and "Tasks ready" graphs are included in the online Tareador Portal.
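To illustrate the syntax from answer 3, here is a rough sketch of how the three phases could be annotated as one task each. The two `tareador_*` functions below are stand-in stubs written only so that the sketch compiles without the Tareador headers; with the real tool they come from Tareador itself and their exact signatures may differ. The helper names, the use of `qsort` as a placeholder for the sort, and `run_annotated` are assumptions for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Stand-in stubs (assumption): they only count tasks. The real
   functions are provided by Tareador and record the task instead. */
static int tasks_started = 0;
static int tasks_open = 0;

static void tareador_start_task(const char *name)
{
    (void)name;          /* the real tool uses the name to label (and color) the task */
    tasks_started++;
    tasks_open++;
}

static void tareador_end_task(void)
{
    assert(tasks_open > 0);  /* every end must match an earlier start */
    tasks_open--;
}

/* Fill the array in descending order so that there is something to sort. */
static void initialize(int *data, size_t n)
{
    for (size_t i = 0; i < n; i++)
        data[i] = (int)(n - i);
}

/* Zero the scratch buffer. */
static void clear(int *tmp, size_t n)
{
    for (size_t i = 0; i < n; i++)
        tmp[i] = 0;
}

static int cmp_int(const void *a, const void *b)
{
    int x = *(const int *)a, y = *(const int *)b;
    return (x > y) - (x < y);
}

/* Annotate each phase as a single task and return how many tasks were started. */
int run_annotated(int *data, int *tmp, size_t n)
{
    tareador_start_task("initialization");
    initialize(data, n);
    tareador_end_task();

    tareador_start_task("clear");
    clear(tmp, n);
    tareador_end_task();

    tareador_start_task("multisort");
    qsort(data, n, sizeof *data, cmp_int);  /* placeholder for the actual sort */
    tareador_end_task();

    return tasks_started;
}
```

With the sort wrapped in a single task like this, only the initialization and clear phases can overlap. Moving the start/end pair inside the recursive calls is what produces the many differently colored multisort tasks.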

Thanks for the questions. 😉

Best! V.

Which sorting algorithm is used in this multisort? Is it quicksort, mergesort or even something else?

The nature of the algorithm greatly affects parallelization strategy.

Hi Marko,

For the purpose of learning the OmpSs model and the visualisation tools it is not important which code I am working on, which is why I did not explain the code.

Multisort is based on “recursive decomposition”. I encourage you to google it and research the process if you are deeply interested. 😉

Best! V.