On the finish line

The Summer of HPC is coming to an end, and this will be my last post. That makes it the perfect occasion to present the results of my work on the Boltzmann-Nordheim equation and to summarize my experiences of the last two months. As mentioned in my first blog post, the goal of my project was to improve the computation of the collision term in the simulation of the equation.

How to measure improvement

“Improving the computation” is vague, so let us look more closely at what we were aiming for in our project. When running the computation of the collision term, we can measure the time the computer needs to execute the code, and reducing this time is one of our goals. Additionally, we can look at how well the code scales.
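As a minimal sketch of what "measuring the time" can look like, assume a Python callable that stands in for the collision-term computation (the names `time_kernel` and `dummy_kernel` are hypothetical and not taken from the project's code). Repeating the measurement and keeping the fastest run reduces noise from other activity on the machine:

```python
import time
from typing import Callable


def time_kernel(kernel: Callable[[], None], repeats: int = 5) -> float:
    """Run `kernel` `repeats` times and return the fastest wall-clock time in seconds."""
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        kernel()
        elapsed = time.perf_counter() - start
        best = min(best, elapsed)  # keep the least-disturbed run
    return best


if __name__ == "__main__":
    # Hypothetical stand-in for the collision-term kernel: just sums some squares.
    def dummy_kernel() -> None:
        sum(i * i for i in range(100_000))

    print(f"best of 5: {time_kernel(dummy_kernel):.4f} s")
```

In an MPI code one would more likely use `MPI_Wtime`, typically after an `MPI_Barrier` so that all processes start together, and report the time of the slowest process.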

In my second post, I explained that we want to use multiple processes of a computer to speed up the computation. When we investigate the scaling of a code, we ask how effective it is to use more processes (or “a bigger computer”) to execute the code faster.

Good vs. bad scaling

If we write a program that behaves like building Lego-Grogu as in the video, we have achieved good scaling: doubling the number of builders halves the building time. In the same way, we expect half the execution time of a program when we double the number of processes. What we try to avoid are problems whose execution time does not shrink as we add resources. An example of such behaviour is playing Beethoven's Symphony No. 1: no matter how many musicians are playing, it always takes the same amount of time.
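The two examples can be written as simple time models. This is a minimal sketch, assuming an idealized task of fixed total work (the function names are hypothetical): speedup is the baseline time divided by the new time, and efficiency divides that speedup by the factor by which the number of processes grew, so perfect scaling gives an efficiency of 1.

```python
def ideal_time(work: float, processes: int) -> float:
    """Perfectly parallel task (the Lego example): the work is split evenly."""
    return work / processes


def fixed_time(duration: float, processes: int) -> float:
    """Task that cannot be split (the symphony example): more players do not help."""
    return duration


def speedup(t_base: float, t_p: float) -> float:
    """How many times faster the run with more processes is than the baseline."""
    return t_base / t_p


def efficiency(t_base: float, p_base: int, t_p: float, p: int) -> float:
    """Speedup divided by the growth factor of the process count (1.0 = perfect)."""
    return speedup(t_base, t_p) / (p / p_base)


if __name__ == "__main__":
    work = 8.0  # arbitrary units of time on one process
    for p in [1, 2, 4, 8]:
        lego = speedup(work, ideal_time(work, p))
        symphony = speedup(work, fixed_time(work, p))
        print(f"p={p}: Lego speedup {lego}, symphony speedup {symphony}")
```

Real programs sit somewhere between these two extremes, which is why measured speedups are usually compared against the ideal curve.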

During the Summer of HPC, I tried to improve the scaling of a part of a program so that it behaves more like the Lego-building example.

About communicating processes

In my project, I focused on the term Q1q, which currently takes the longest to compute. The key idea for improving it is a new pattern of communication between the processes and a new way of distributing the data among them. We assume that we have M×N processes which, like an M×N matrix, can be split along the rows and the columns. Moving data along the rows and columns distributes it quickly and evenly. In the following, we analyze the results in more detail.
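To make the M×N picture concrete, here is a minimal sketch in plain Python (no MPI library) that places each process rank in a row-major grid and groups the ranks by row and by column, which is what `MPI_Comm_split` would give with the row or column index as the color. It also counts sequential sends under a deliberately simple cost model, assumed here only for illustration: each process sends one message per step, and no tree-based broadcast is used. All function names are hypothetical, and this is not the project's actual communication scheme.

```python
from typing import Dict, List, Tuple


def grid_coords(rank: int, n_cols: int) -> Tuple[int, int]:
    """Row-major position (row, column) of a rank in an M x N process grid."""
    return divmod(rank, n_cols)


def row_and_column_groups(
    m_rows: int, n_cols: int
) -> Tuple[Dict[int, List[int]], Dict[int, List[int]]]:
    """Group ranks by row and by column, like splitting with color = row or column."""
    rows: Dict[int, List[int]] = {r: [] for r in range(m_rows)}
    cols: Dict[int, List[int]] = {c: [] for c in range(n_cols)}
    for rank in range(m_rows * n_cols):
        r, c = grid_coords(rank, n_cols)
        rows[r].append(rank)
        cols[c].append(rank)
    return rows, cols


def broadcast_steps(m_rows: int, n_cols: int) -> Tuple[int, int]:
    """Sequential send steps to spread one block from rank 0 to all processes.

    flat:      rank 0 sends to every other rank, one after another.
    two_stage: rank 0 sends down its column first; then the first process of
               each row forwards along its row, with all rows working at once.
    """
    flat = m_rows * n_cols - 1
    two_stage = (m_rows - 1) + (n_cols - 1)
    return flat, two_stage


if __name__ == "__main__":
    rows, cols = row_and_column_groups(2, 4)
    print(rows)  # {0: [0, 1, 2, 3], 1: [4, 5, 6, 7]}
    print(cols)  # {0: [0, 4], 1: [1, 5], 2: [2, 6], 3: [3, 7]}
    print(broadcast_steps(4, 4))  # (15, 6)
```

Under this model the two-stage pattern needs (M−1)+(N−1) steps instead of M·N−1, because the rows (or columns) exchange data in parallel.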

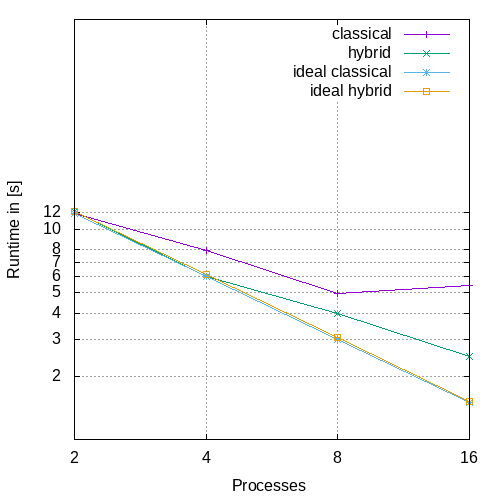

To analyze the results of two months of coding, I ran two experiments. For the first, I ran a simulation on a 64×64 grid, starting with 2 processes. In each following test, I doubled the number of processes and measured the time needed to compute the term Q1q with both the new (hybrid) and the old (classical) computation.

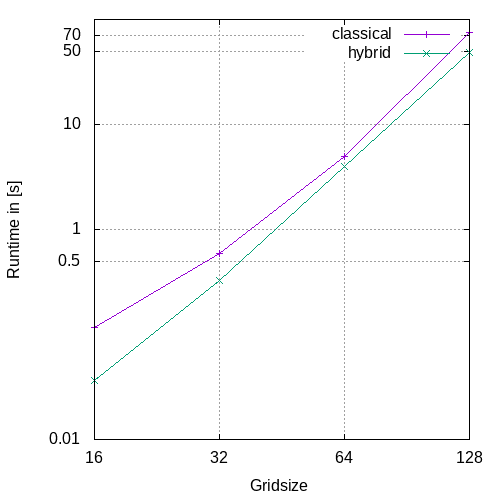

In the second test, I fixed the number of processes to 8 and increased the grid size from 16×16 up to 128×128. That way, we can analyze two influences. The first test shows how effectively the computation scales, i.e. how much using more processes speeds it up. The second test shows how much increasing the grid size slows the computation down.

Both graphs show that the new hybrid method is an improvement over the initial (classical) version. The hybrid method benefits more from additional processes than the classical one: with two processes, the runtimes are very similar, but as we increase the number of processes, the hybrid method computes the Q1q term faster.
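Because the first experiment starts at 2 processes rather than 1, speedups are naturally measured relative to the 2-process run. A minimal sketch of that comparison, assuming the timings are stored as a dictionary from process count to seconds (the helper names and the numbers in the example are hypothetical, not the measured results in the figure):

```python
from typing import Dict, List


def relative_speedup(timings: Dict[int, float]) -> Dict[int, float]:
    """Speedup of each run relative to the run with the fewest processes."""
    p_base = min(timings)
    t_base = timings[p_base]
    return {p: t_base / t for p, t in sorted(timings.items())}


def ideal_speedup(process_counts: List[int]) -> Dict[int, float]:
    """Speedup a perfectly scaling code would reach, relative to the smallest run."""
    p_base = min(process_counts)
    return {p: p / p_base for p in sorted(process_counts)}


def hybrid_advantage(
    classical: Dict[int, float], hybrid: Dict[int, float]
) -> Dict[int, float]:
    """How many times faster the hybrid run is than the classical one, per process count."""
    return {p: classical[p] / hybrid[p] for p in sorted(classical) if p in hybrid}


if __name__ == "__main__":
    # Hypothetical timings in seconds, for illustration only.
    classical = {2: 10.0, 4: 6.0, 8: 4.0}
    hybrid = {2: 10.0, 4: 5.5, 8: 3.0}
    print("ideal:    ", ideal_speedup(list(hybrid)))
    print("classical:", relative_speedup(classical))
    print("hybrid:   ", relative_speedup(hybrid))
    print("advantage:", hybrid_advantage(classical, hybrid))
```

A method that "benefits more from using more processes" shows a relative speedup closer to the ideal column, and its advantage ratio grows with the process count.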

The second graph shows that the grid size still has a huge impact on the computation time, but the hybrid method remains faster than the classical computation.
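One way to put a number on "a huge impact" is to fit a straight line to log(time) against log(grid side length): a slope of about k means the runtime grows roughly like n^k for an n×n grid. This is a minimal sketch under that power-law assumption (the function name is hypothetical, and the example data are synthetic, not the measured timings); with only four grid sizes, the result is a coarse indicator at best.

```python
import math
from typing import Dict


def growth_exponent(timings: Dict[int, float]) -> float:
    """Least-squares slope of log(time) versus log(grid side length n).

    A slope of 2 would mean time proportional to the number of grid points n*n.
    """
    if len(timings) < 2:
        raise ValueError("need timings for at least two grid sizes")
    xs = [math.log(n) for n in timings]
    ys = [math.log(t) for t in timings.values()]
    x_mean = sum(xs) / len(xs)
    y_mean = sum(ys) / len(ys)
    cov = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    var = sum((x - x_mean) ** 2 for x in xs)
    return cov / var


if __name__ == "__main__":
    # Synthetic timings that grow like n**3, for illustration only.
    synthetic = {n: 1e-6 * n**3 for n in (16, 32, 64, 128)}
    print(f"estimated exponent: {growth_exponent(synthetic):.2f}")
```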

A résumé

As this will probably be my last post, I also want to draw a personal conclusion about the Summer of HPC. For me, it was two months of intensive coding, which I enjoyed very much. I want to thank my two supervisors, Alexandre Mouton and Thomas Rey, who supported me during this project. It was a great opportunity for me to improve my skills in MPI programming and in coding in general. Apart from the coding part of the Summer of HPC, I had a lot of fun writing these blog posts and getting creative with the videos. I hope you enjoyed them as much as I did. If you are thinking about taking part in the next Summer of HPC, I can recommend it! It was a great experience for me.

If you want to read more about the Boltzmann-Nordheim equation, have a look at the blog of my project partner Artem!

Goodbye (and good luck on your project, if you are applying for Summer of HPC 2022).

Very interesting and well explained; your videos on YouTube were also very helpful! Thank you very much for that. I have finally understood it 🙂

I am very glad that you liked the videos!

Very nice results, congratulations! Good luck for your future projects!

Thank you very much! All the best for you too!

These look like nice results: a significant improvement in performance and scalability, all in two months' time.

Would be interesting to see how your new method compares on more processes!

I am happy to hear that you enjoyed the summer of HPC and am eager to learn more details from you.

Thank you very much! I agree with you, we should also test it on more processes and investigate the scaling further! For two months of work, I am very happy with the results. I think this is a good point at which Alexandre and Thomas can continue the work.

Now that is what I call a successful finish! 🙂 Great!

And it is wonderful that you can watch everything again on YouTube! I wish you every success with your further projects! 🙂

Thank you very much, I wish you the same!

Thanks for another great explanation, and for the YouTube video too. It really looks like you enjoyed your “Summer of HPC”!

Yes, I enjoyed it very much and also learned a lot!

Great success, David! Getting into a new project in such a short time and getting good results is really a big achievement!

Thank you very much, I appreciate it! Getting into the project was indeed a huge task.

Hello David,

I really liked following your blog posts over the two months. Thank you for the good insight into your Summer of HPC. You got me interested in the Boltzmann-Nordheim equation, and the video explaining parallel computing was fun to watch. It is a very good metaphor that stays in mind. I am happy to see such good results from you in this last post. Again, I was impressed by the simplicity of your explanations of such complex topics.

Hello Annette,

Thank you very much for your comment, I really appreciate it!

It was my goal to get people interested in the topic, and I am happy that I got you interested!

Thank you also for this last blog post and for the video on YouTube. Very informative …

You're welcome, I am glad you liked my posts!

Thank you for letting us be a part of your Summer of HPC. It was very interesting! I wish you the best for future projects! 🙂

Thank you very much, it was a very nice summer project, and it is nice to hear that you liked it too!

It was really interesting seeing your progress on this project in your different blog posts; the posts and videos are all really well made! My favourite part was your simple explanation of code parallelization with LEGO vs. playing a song. Great results, congratulations!

Thank you very much! I am happy that you like the blog posts and the videos! It was a lot of fun to make them!

Again a great post and very nice results! Hope to hear more from you about this soon! 🙂

Thank you again!

Nice résumé and a very good finish to an amazing and interesting blog. It's a pity that it is coming to an end. Maybe we can get more of this in the future? 🙂

Thank you very much for your comment! I am sure there will be another Summer of HPC next year, so have a look at it! From my side, I hope to be visible through some publications in the future, probably not about the Boltzmann-Nordheim equation, but about numerics and high-performance computing!

Awesome videos and a cool project. Thanks for the great documentation.

Thank you! For me it was also a nice and innovative way to talk about a project!