What is Hamiltonian Monte Carlo?

Generating random variables from an arbitrary distribution is a surprisingly difficult task, and Hamiltonian Monte Carlo (HMC) solves this challenge in an elegant way. Let’s consider a multivariate target distribution , with parameters

.

Most Markov Chain Monte Carlo (MCMC) methods work by generating the proposals for the next sample () from the current sample (

). For instance, a MCMC method could take a random gaussian step in parameter space to get the next proposal. The proposal is then accepted or rejected with probability determined by the probability density at the current and the proposed positions. The rejection of samples at a lower density causes the walk to “stay on track”, and for computational resources to be focused on the typical set, where the density of the target distribution is significant.

This simple MCMC method struggles with high-dimensional distributions, as the volume surrounding the typical set is much larger than the volume of the typical set, meaning most propositions are in areas of low density and are therefore rejected. This isn’t very efficient, we can reduce the step-size to increase the acceptance rate, but this causes samples to be highly correlated.

This is where HMC steps in. Each point in the parameter space is assigned a potential energy

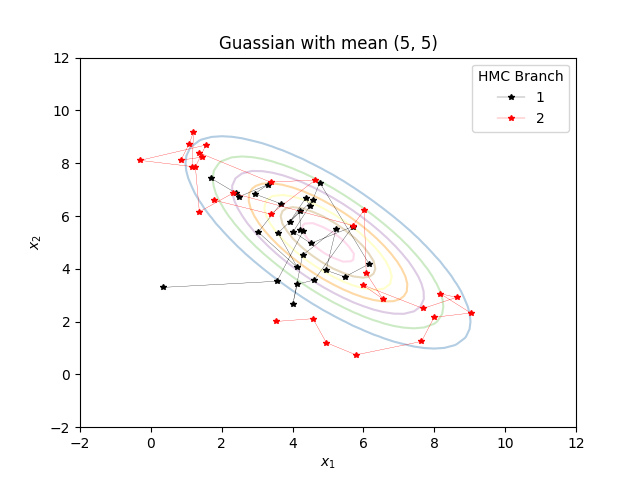

. To concretize this, image a 2D guassian distribution

. This has a bowl shaped parabolic potential energy function.

To go from one sample to the next, we give the “particle” a random momentum and simulate the evolution of the system for a certain number of time-steps. To concretize this imagine a hockey puck being given a random momentum in a large, parabolic bowl. In an ideal world, this method of generating proposals negates the need for an acceptance/rejection step, for reasons outlined in the references below. However, in reality, a small number of proposals are rejected due to numerical integration errors. Nonetheless, with a suitable time-step most proposals are accepted and samples have a lower correlation than those produced by simple MCMC.

Up until this point, Bruno and I have been working to implement and parallelize this algorithm with the help of our mentor Anton. In the next blog post I’ll talk about how we’ve been using jax, a python module which massively speeds up numpy on CPUs and GPUs.

References

Tom Begley’s Blog Post – Thanks for granting me permission to use the animation above!

M. Betancourt – A Conceptual Introduction to Hamiltonian Monte Carlo