Parallelizing the Implicitly Restarted Arnoldi Method

After a quite general introduction to the topic of lattice QCD in my last blog post, in this post I will get a little bit more technical and describe the first part of my project that I have worked on during my summer of HPC.

The computationally most expensive operation that we are dealing with in lattice QCD is the inversion of large linear systems of the form

where is a discretized form of the Dirac operator, basically a square sparse matrix of size 12 times the number of lattice sites on our lattice. As mentioned in my previous blog post, we have to handle lattice sizes with up to and more than 10 million sites, so those linear system we are dealing with are in deed large. The method that is used for the solution of those system is the conjugate gradient method. Its convergence can be accelerated using a technique called deflation, which removes components of eigenvectors with small eigenvalues from the starting vector. To use deflation, we obviously need to first compute the eigenvectors of

and my task was to optimize those computations.

The method used for the computation of the eigenvectors is the implicitly restarted Arnoldi method (IRAM). The method consists of two main steps. In the first one, a -step Arnoldi factorization of

is computed. This decomposition has the form

where is upper Hessenberg and

. The important point here is that if the residual vector

goes to zero, the

eigenvectors of

are also eigenvectors of

with the same eigenvalues. Thus, if we know the eigenvectors of

, we also know some of the eigenvectors of

. Since we are only interested in the smallest eigenvectors from the spectrum of

, we should ideally also have a way to control which eigenvectors of

enter into the spectrum of

. This can be achieved in the second step of IRAM, where

so called implicit shifts are applied to the

-step Arnoldi decomposition. This produces a

-step Arnoldi factorization from the previous

-step Arnoldi decomposition and effectively suppresses eigenvectors with eigenvalues

. Thus, if the shifts are chosen to be the

largest eigenvalues of

, the shifts can be used to remove the eigenvectors with large, unwanted eigenvalues. Those two steps are then repeated until the residual

has become sufficiently small.

An importatnt feature of the implicitly restarted Arnoldi method is that the only required operation involving the Dirac operator needed for the computation of the eigenvectors is the action

on a vector

. All other operations involve only the much smaller matrices

and

. Since the matrix

is extremely large but sparse, this allows for an efficient computation of the eigenvectors.

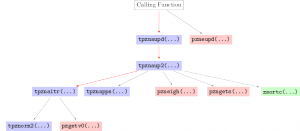

The call structure of the PARPACK library. The graph displays the subroutines involved in the computation of the eigenvectors. Functions names on a blue background are the ones that I parallelized.

The starting point for my project was an implementation written for hybrid cluster machines with one GPU on each node. The computation of the eigenvectors was parallelized over the compute nodes using the MPI implementation of PARPACK, which is a software package implementing the implicitly restarted Arnoldi method. Since the action of the Dirac operator is the computationally most expensive operation in the computation of the eigenvectors and exhibits a high degree of parallelism it is run on each nodes GPU. The number of MPI processes per node is therefore limited to one and the implicitly shifted Arnoldi method was running in serial on each nodes multiple CPUs. My task was therefore to write a thread parallel version of the method in order to fully exploit available computational resources.

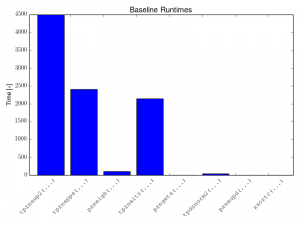

Serial runtimes for the subroutines of the PARPACK library. A significant improvement in overall performance can of course only by expected from the parallelization of subroutines in which much time is spent during serial execution.

The first step in the development of a threaded version of PARPACK was to analyze which functions are involved in a call to the library and their impact on the total execution time. From those results, the three performance critical subroutines were identified and then parallelized using OpenMP. Since the computation of the Arnoldi factorization consists mainly of linear algebra operations which are performed in PARPACK by calls to the LAPACK library, a second way to achieve parallelism is to link PARPACK to a multi-threaded implementation of LAPACK.

Performance benchmark of the manually parallelized code (solid lines) and the implementation using mutli-threaded LAPACK (dashed lines).

To see which of the two approaches yields better performance, we performed several benchmarks. The timings for the parallelized versions of the PARPACK subroutines are displayed in the figure above. They were obtained on Prometheus which is a development cluster here at the Cyprus Institute. As can be seen from the results, parallelizing the code leads to an increase in performance of up to a factor of four.

This was what I was working on during the first part of my summer of HPC. I think this project is an exciting example of how different parallel programming paradigms have to be combined to get the most out of modern hybrid cluster machines and I learned a lot about each of them in the course of the project.