Summer in Budapest

After a great first week in Barcelona, Juraj and I flew to our Hungarian site to spend the rest of the summer project in the Beauty on the Danube: the city of Budapest.

Budapest

I was simply amazed by the city. Historical architecture, mostly from the 19th century, with ornamental facades covers a large area of the twin city of Buda and Pest. Lively, wide downtown streets and squares, with plenty of small shops, bakeries and cheap pubs at every corner, preserve something of the atmosphere of old times.

The unique treasure of Budapest’s beauty is the river Danube. It is crowded with ships (there are even cheap public transport lines on it) and flows under six bridges, which are beautifully illuminated at night. Promenades line its banks, and all the major sights are visible, and almost reachable, from the river. The famous Danube island Sziget serves as a relaxation zone and hosts a well-known music festival. You can enjoy thermal water in several historical baths and nice swimming pools in the old town. Public transport works well, and you can easily get by without knowing Hungarian.

Great expectations

Our Hungarian hosts gave us a warm reception. Barbara welcomed us at the airport and took us to our accommodation in a nice new dormitory. The next morning, our supervisors Tamas and Gabor introduced us to many colleagues.

Our host organization is called NIIF, the National Information Infrastructure Development Institute (in Hungarian Nemzeti Információs Infrastruktúra Fejlesztési Intézet, which I am still unable to pronounce fluently). NIIF is responsible for developing and operating the Hungarian academic network and for maintaining the High Performance Computing infrastructure. The same building also houses big internet providers and server hosting companies.

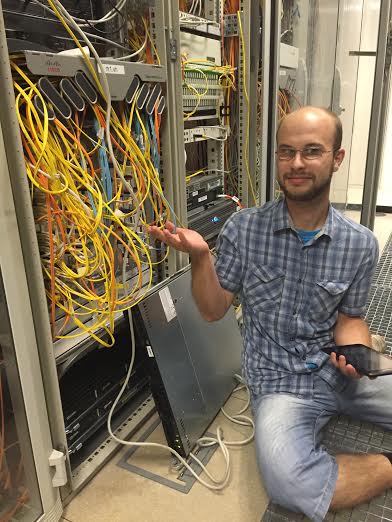

That fact led to some funny moments. On the first day we visited the underground heart of NIIF: racks of high-end routers connecting Hungary to the internet, and two of NIIF’s supercomputers. Walking through the racks and touching these blinking machines was a fascinating experience, accompanied by a slight sense of responsibility. Within a few hours we were given our own office with twelve (!) network sockets and wifi. None of them worked (reliably)! Two technicians spent three hours getting us a network connection. As we saw no news about an internet outage across the whole country that day, we felt much better. The wifi, however, stayed moody: it worked well most of the time but suddenly dropped the connection for unknown reasons. I was curious about this strange behaviour and looked forward to a complicated technical explanation from the staff, but instead of giving me one, they placed a new router in our office.

The nice part was the actual one-gigabit-per-second internet connection from our room, and even wifi at over 100 Mbps. (We needed the wifi for mobile devices, because our GNU/Linux computers could not run the specific commercial web-meeting software we used for simultaneous video calls among more than twenty sites across Europe with our fellow Summer of HPC participants.)

A nice metaphor was placing the rack that connects people to the net in a room with the very same function: the kitchen. The kitchen was certainly the most important room for the whole department; with hot and cold drinks, full fridges and sweets from conferences, it was a popular meeting place.

After working with teraflops supercomputers and terabytes of data, we used to go to the Tera restaurant for a high-speed lunch. Our expectations and resources were very high.

Work

Now a few words about our project: accelerating Quantum Espresso simulations with the power of GPU accelerators.

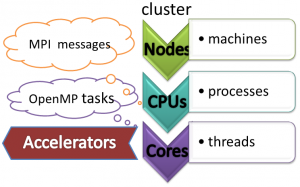

Building High Performance Computing infrastructure means grouping and interconnecting computing nodes, each of which contains multiple processors with many cores. Such clusters may contain anything from hundreds up to millions of cores. At the upper end of that range, sensibly distributing the work and the associated data is a challenge even for technology leaders.

Each node runs independent program processes that communicate and distribute data via MPI messages. Within a level of shared memory (such as a single node), OpenMP governs the cooperation between the computation threads that process the data.
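To make the two levels concrete, here is a minimal Python sketch of a hybrid split. It is an illustration only: the "ranks" stand in for MPI processes and the "threads" for OpenMP threads, but here both are plain loop indices. The helpers `block_range` and `hybrid_split` are hypothetical, and the even, contiguous block split is an assumption, not how Quantum Espresso actually distributes its data.

```python
from __future__ import annotations


def block_range(n_items: int, n_parts: int, part: int) -> range:
    """Contiguous indices owned by `part` when n_items are split as evenly
    as possible among n_parts (the first n_items % n_parts parts get one extra)."""
    base, extra = divmod(n_items, n_parts)
    start = part * base + min(part, extra)
    stop = start + base + (1 if part < extra else 0)
    return range(start, stop)


def hybrid_split(n_items: int, n_ranks: int, threads_per_rank: int) -> dict[tuple[int, int], range]:
    """Two-level split: first among ranks (distributed memory, MPI-like),
    then each rank's block among its threads (shared memory, OpenMP-like)."""
    layout = {}
    for rank in range(n_ranks):
        rank_block = block_range(n_items, n_ranks, rank)
        for thread in range(threads_per_rank):
            local = block_range(len(rank_block), threads_per_rank, thread)
            # Shift the thread's local range into global item indices.
            layout[(rank, thread)] = range(rank_block.start + local.start,
                                           rank_block.start + local.stop)
    return layout


if __name__ == "__main__":
    for (rank, thread), items in hybrid_split(10, n_ranks=2, threads_per_rank=2).items():
        print(f"rank {rank}, thread {thread}: items {items.start}..{items.stop - 1}")
```

In a real MPI program each process would compute only its own entry, using the rank returned by `MPI_Comm_rank`; OpenMP's `schedule(static)` clause performs a similar contiguous split of loop iterations among threads.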

We were given the opportunity to work not with one, but with six Hungarian supercomputers, and to learn some local geography along the way, as you can see below.

| Name | Budapest | Budapest2 | Szeged | Debrecen | Debrecen2 | Pécs |
|---|---|---|---|---|---|---|
| Type | HP CP4000BL | HP SL250s | HP CP4000BL | SGI ICE8400EX | HP SL250s | SGI UV 1000 |
| CPUs / node | 2 | 2 | 4 | 2 | 2 | 192 |
| Cores / CPU | 12 | 10 | 12 | 6 | 8 | 6 |
| RAM / node | 66 GB | 63 GB | 132 GB | 47 GB | 125 GB | 6 TB |
| RAM / core | 2.6 GB | 3 GB | 2.6 GB | 2.6 GB | 7.5 GB | 5 GB |
| CPU | AMD Opteron 6174 @ 2.2 GHz | Intel Xeon E5-2680 v2 @ 2.80 GHz | AMD Opteron 6174 @ 2.2 GHz | Intel Xeon X5680 @ 3.33 GHz | Intel Xeon E5-2650 v2 @ 2.60 GHz | Intel Xeon X7542 @ 2.66 GHz |
| GPU / accelerator | – | 2 × Intel Xeon Phi / node | 2 × 6 Nvidia M2070 | – | 2 × Nvidia K20x / node | – |
| Linpack performance (Rmax) | 5 Tflops | 32 Tflops | 20 Tflops | 18 Tflops | 202 Tflops | 10 Tflops |
| Nodes | 32 | 14 | 50 | 128 | 84 | 1 |
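Multiplying nodes × CPUs per node × cores per CPU from the table gives a rough total CPU core count for each machine. This small Python sketch assumes every node in a machine is identical, as the table suggests, and counts only CPU cores, not the cores inside the GPUs or Xeon Phi cards; `MACHINES` and `total_cores` are illustrative names.

```python
# (nodes, CPUs per node, cores per CPU), copied from the table above.
MACHINES = {
    "Budapest": (32, 2, 12),
    "Budapest2": (14, 2, 10),
    "Szeged": (50, 4, 12),
    "Debrecen": (128, 2, 6),
    "Debrecen2": (84, 2, 8),
    "Pécs": (1, 192, 6),
}


def total_cores(nodes, cpus_per_node, cores_per_cpu):
    """CPU cores in a machine, assuming all of its nodes are identical."""
    return nodes * cpus_per_node * cores_per_cpu


if __name__ == "__main__":
    for name, spec in MACHINES.items():
        print(f"{name:<10} {total_cores(*spec):>5} CPU cores")
    print("all six:", sum(total_cores(*spec) for spec in MACHINES.values()))
```

By this count all six machines sit in the range of hundreds to a few thousand CPU cores, from 280 on Budapest2 to 2400 on Szeged.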

The power of accelerators lies in their ability to run the same short routine in parallel over many small pieces of a larger chunk of data. GPUs run so-called kernels written in a special language (mainly CUDA), while Intel Xeon Phi cards employ 60 cores that can accelerate standard code such as Math Kernel Library (MKL) routines.
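The indexing idea behind a CUDA kernel can be emulated sequentially. The Python sketch below is only an illustration: `launch` and `saxpy_kernel` are hypothetical names, the nested loops over blocks and threads stand in for work the GPU would run concurrently, and the index formula mirrors CUDA's `blockIdx.x * blockDim.x + threadIdx.x`.

```python
import math


def launch(kernel, n, block_dim, *args):
    """Emulate a 1-D kernel launch over n elements.

    The grid is rounded up to whole blocks of block_dim threads, and every
    (block, thread) pair runs the same kernel body. On a GPU these calls
    would execute concurrently; here they run one after another.
    Returns the number of blocks launched.
    """
    grid_dim = math.ceil(n / block_dim)
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, n, *args)
    return grid_dim


def saxpy_kernel(block_idx, block_dim, thread_idx, n, a, x, y):
    """One thread's work: y[i] = a * x[i] + y[i] for a single element i."""
    # Global element index, mirroring blockIdx.x * blockDim.x + threadIdx.x in CUDA.
    i = block_idx * block_dim + thread_idx
    if i < n:  # the last block may contain spare threads
        y[i] = a * x[i] + y[i]


if __name__ == "__main__":
    x = [1.0] * 10
    y = [float(k) for k in range(10)]
    blocks = launch(saxpy_kernel, len(x), 4, 2.0, x, y)
    print(blocks, y)  # 3 [2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0, 11.0]
```

The `if i < n` guard matters because the grid is rounded up to whole blocks, so the last block can contain threads with no element to process.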

Building Quantum Espresso, a PRACE partner’s simulation software, means compiling the source code and linking it with the proper libraries. We became familiar with the whole process of setting up the right software environment on each platform. We gained experience with the GNU and Intel compilers and with various Open MPI and Intel MPI versions built with different compilers. We even succeeded in installing and setting up the newest Intel Composer suite.

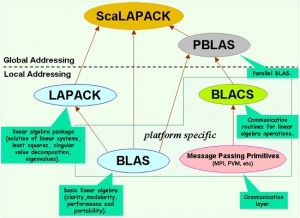

The most problematic part was linking Quantum Espresso to the ScaLAPACK libraries (here ScaLAPACK stands for the whole family of parallel linear algebra solvers). The built-in configuration script was insufficient, the vendor’s documentation was poor, and online tutorials may work only on their author’s configuration, because the bottom-level parts of ScaLAPACK are platform specific (CPU/GPU/Intel Xeon Phi). For illustration, I present a very simplified ScaLAPACK scheme from the vendor’s page (http://acts.nersc.gov/scalapack/).

If you want to know whether we succeeded, you can read more in our final report or watch the video below.