We arrived at BSC; now what?

It was only recently that I realized this experience called SoHPC is already midway through and since it has been almost an entire month that I have been silent I decided to drop a few lines in order to give a heads-up on how my time is rolling. If you want to know how it all started, just click here.

Everything started in 2014. That was the time when I transited from a schoolboy to an ambitious student (or as one might say: to an ambitious engineer-in-progress). Around 2000 km away from this transition however my current co-workers and mentors were experimenting in optimising applications that used computing systems with exotic memory architectures. Their results were and still are very promising.

Addressing to my 2014 self I feel more than obliged to explain a few technical insights that shall provide a deeper understanding of what is going to follow:

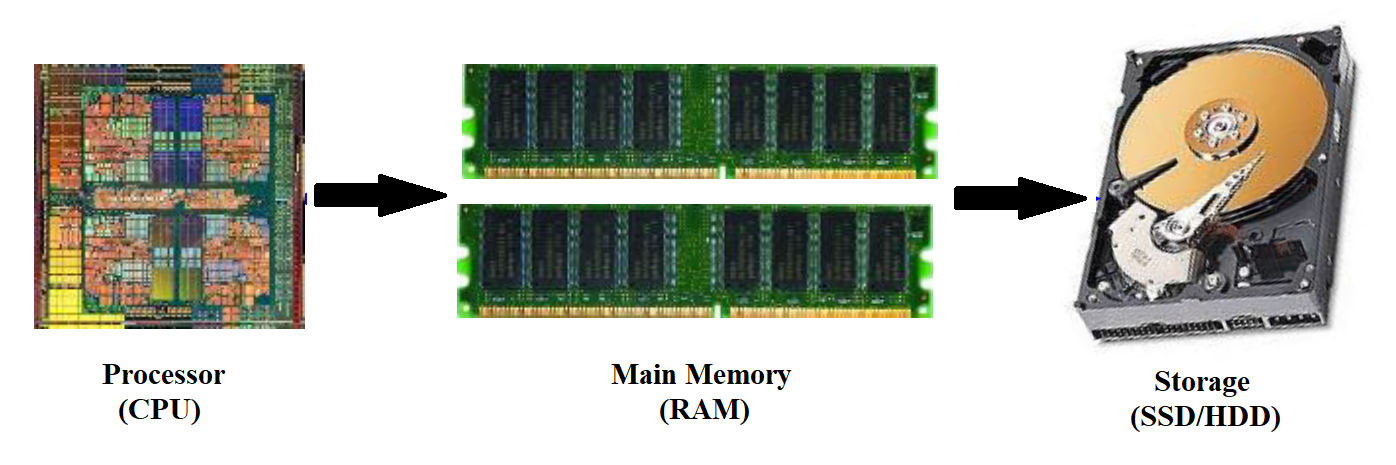

Talking about applications we are essentially refering to computer programs. In order for a program to “run” on our PC it has to store data and what could be a better place to store data if not our PC’s memory system? The most common memory systems (i.e. the ones your laptop probably has) consist of a very slow Hard Drive, which can store more or less one TB of information (thankfully the GBs era is long over) and a quite fast but relatively small (just a few GBs for a conventional laptop) Read Access Memory (RAM). In this point I have to admit that there are also even faster memory architectures present in every computer which however are not in the programmer’s control and thus shall exercise their right for anonymity for this post (and presumably for the ones that will follow). When a “domestic use” program runs, it needs data that ranges from many MBs to a few GBs and therefore it is able to transfer it to RAM where the access is rapid.

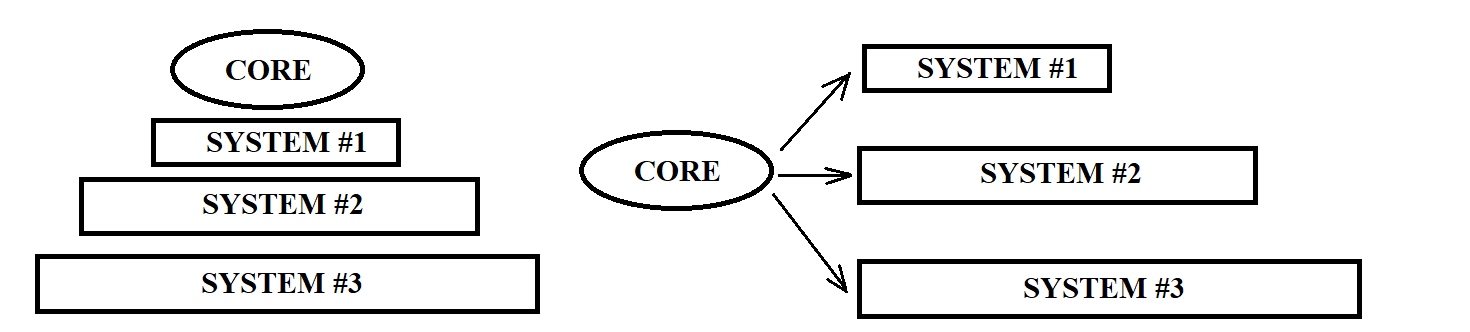

So far so good, but what happens when this “important” data jump from a handful to hundrends of GBs and the memory-friendly application becomes a memory-devourer (the most formal way would be memory-bound)? This is where things take a turn for the worse; this is where this simple memory architecture does not suffice; this is, finally, where more complicated memory levels with various sizes and lower access time come into play. These memory levels are added not in a hierarchical way but rather in more equal one. To understand the organization we could think of a hierarchical system as the political system of ancient Rome, where patricians would be fast-access memory systems that host only the data accessed first (high-class citizens) while plebeians would be slow-access memory systems that host every left data. On the other hand, a more democratic approach, such as the one of ancient Greece, would give equal oportunities to all citizens which in our case would be translated as: any memory system, depending on its size and access time of course, has the chance to host any data.

Having clarified the nature of the applications that are targeted as well as the hardware configuration needed, we can now dive into what has been going on here, at BSC. Extending various open-source tools (a following post should provide more information regarding a summary of these tools and their extensions) my colleagues managed to group the accessed data into memory objects and track down every single access to them. After instensive post-processing of the collected data that takes into account several factors such as:

1) the object’s total number of reference

2) the type of this reference (do we just want to read the object or maybe also modify it)

3) the entity of the available memory systems they managed to discover the most effective object distribution.

I have to admit that the final results were pretty impressive!

So this is what has already been done, but the question remains: Now What? Well, now is where I come into play in order to progress their work in terms of efficiency. Collecting every single memory access of every sinlge memory object can be extremely painful (at least for the machine that the experiments are run on) especially if we keep in mind that these accesses are a few dozen billions. The slowdown of an already slow application can be huge when trying to keep track of such numbers. The solution is hidden in the fact that the exact number of memory accesses is not the determinant factor of the final object distribution. On the contrary, what is crucial, is to gain a qualitive insight of the application’s memory access pattern which can be obtained successfully when performing sampled memory access identification. These results shall allow an object distribution that exhibits similar or even higher speedup than the already achieved one. At the same time however, the time overhead needed to produce these results will be minimized providing faster and more efficient ways to profile any application.

I have already started setting the foundation for my project and at the moment I am in the process of interpteting the very first experiment outcomes. Should you find yourselves intrigued by what has preceded please stay tuned: more detailed, project related posts shall follow. Let’s not forget however that this adventure takes place in Barcelona and the minimum tribute that ought to be paid to this magestic city is a reference of the secrets it hides. To be continued…

Follow me on LinkedIn for more information about me!