Job Scheduling Algorithms and The Open at Carnoustie

I’m well underway into the PRACE Summer of HPC program and my project is going well. I am working with Dr. Nick Johnson (link) on the NEXTGENIO project (link). Here is an introduction to my project.

HPC systems are expensive machines, and running jobs on them uses up precious resources and funding. The study of how jobs get allocated to different nodes could maximise the usage of these resources. The goal of my project is to explore how we can utilise our resources in an optimal way by testing different job scheduling algorithms.

When a person wants to do some work on a HPC system they are allocated some nodes on which they can run their code for whatever their purposes may be, a person may be given 8 nodes typically. To give some scale about the capacity of the HPC system called `Archer’ at EPCC, it has 4920 nodes on which users can run simulations, calculations or whatever their desire may be. Each node has 24 processors, so that’s over 100,000 processors. There are lots of jobs running on Archer all the time and also lots of requests for nodes to be allocated. So it is of vital importance to study how these nodes are allocated to different users so that we minimise the number of inactive nodes during a time slot and maximise the number of jobs performed.

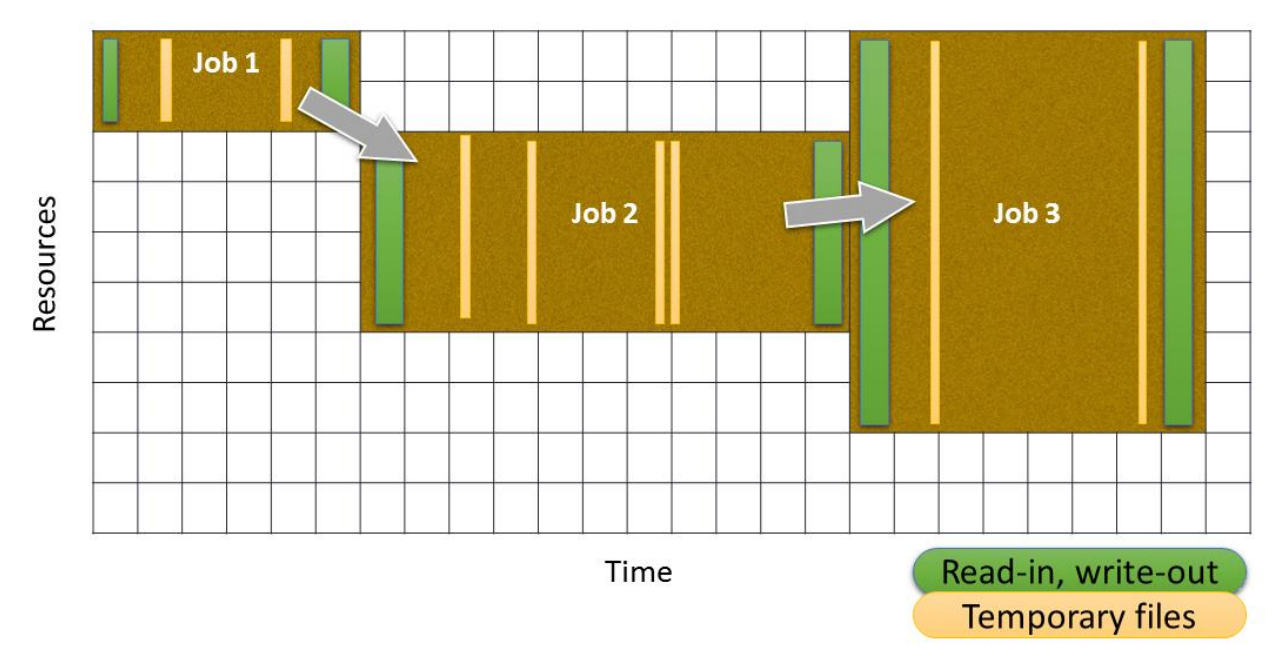

Diagram of jobs running on a HPC system. Some nodes are left vacant while other perform multiple jobs. Note how the diagram highlights the time spent Reading and Writing. This can be reduced by using NNVRAM if the same nodes are used again. This is one of the topics of investigation during this project.

Different users may request different numbers of nodes (depending on the size of their project) and different lengths of time for how long they will need them. Suppose Alice requests 100 nodes for 8 hours and then Bob requests 10 nodes for 2 hours. If we were to operate on a first-come first serve basis (a very näive approach), then both Alice and Bob must wait for the 100 nodes to become free before Alice can run her jobs and then Bob can run his. However, suppose 20 nodes were free the whole time and Alice must wait more than 2 hours before 100 nodes are free to begin her job. A more efficient algorithm would allow Bob to skip Alice in the queue and let him perform his job on these nodes that she can’t use. He will not be delaying the time at which she begins her jobs as she will still be waiting for the other 80 of the 100 nodes to become free when Bob is finished. This is a very simple two job example to demonstrate how we can have 2 different job scheduling algorithms. Clearly the second algorithm will reduce the overall time to perform the two jobs in this situation.

This is a simple case, but it serves as an example to see the importance in job scheduling on a HPC system, otherwise queues for jobs could be days long. If you’d like to learn more, here is a paper I’ve been reading about it (link).

Free time

I’m a very keen golfer, having represented my university for 4 years, and Captaining the team. Being in Scotland, the glorified home of golf, it is pretty special, especially at this time of year when the Scottish and British Opens are on back to back weeks and in close proximity to Edinburgh. So it was on my agenda to make sure I went to one of golf’s 4 majors, the Open at Carnoustie golf club. My dad flew in and we made a trip of it. Seeing Tiger Woods return to some of his old form, McIlroy hit Driver-Sand Wedge to a par 5, Jason Day hit a wedge stone dead to a foot and the eventual winner Francseco Molinari were the standout moments. A great day, to get the head cleared after a tough week of coding.

Me at the British Open

The Iconic 18th hole, where tournaments are won and lost; just ask Molinari and Van de Velde.