Deep emotion recognition

A month has passed since my stay in IT4Innovations started. Apart from working on my project, which I will comment on below, I’ve been spending my weekends exploring Ostrava and some nearby cities.

The first weekend started with a bang at Colours of Ostrava, where tons of famous bands like The Cure and Florence + the Machine performed at one of the most important music festivals in the Czech Republic. It took place in an old factory, an amazing location full of pipes, huge rusty towers and iron furnaces.

Another highlight of these weekends has been meeting up with fellow summer of HPC members in Vienna. We stayed for less than two days. We saw an awful lot of palaces, music-related museums (like Mozart’s house) and beautiful gardens. I left eager to come back and continue exploring a city that’s full of history and stories.

Anyways, a month working on my project has given me time to explore and learn a lot about the topic I’m working on. It took a while to get used to all the technology I had to learn, like how to deploy apps on the Movidius Compute Stick or understanding how to use the deep learning frameworks –Keras and Tensorflow.

However, I now feel confident to explain what I’ve been doing, so I will start by giving an overview of the problem I’m trying to solve here, as it drifted away from the original proposal.

I originally was going to address the problem of navigation of urban spaces for blind individuals. In more detail, I was going to train a deep neural network to perform object detection on common obstacles that a visually impaired individual might encounter.

However, after looking deeper into the problem, I found that a big issue that blind individuals face is interacting with newly met people in the street.

None verbal language is a huge part of any social interaction and it can be hard to understand the intent of someone we just met from only the tone of their voice.

So I wondered, what if I could come up with a model able to identify someone’s facial expressions from a video stream? This would make up for a missing visual context and enhance communication for visually impaired individuals.

Once I decided on the new problem I wanted to solve I started looking into the literature of emotion recognition. A relatively naive approach and the one I started with was to train a neural network to classify images based on the emotions represented in them. A classical multiclass classification problem. Most of the datasets available provided images classified on the main 7 emotions: anger, fear, disgust, happiness, sadness, surprise and content. The main issue was the variability of each emotion across different subjects and the lack of specificity in the emotions described. Two facial expressions both identified as happy could be dramatically different. This not only made it quite challenging for the neural network to understand the common patterns underlying facial expressions but was also quite limiting in terms of emotion variability.

Thus, after doing some further reading, I found a much more optimal solution. A perfect way to facilitate the training process, while allowing for more variability in the emotions and without increasing the complexity of the problem.

In 1970, Carl-Herman Hjortsjö came up with a way to encode the most common muscle movements involved in human facial expressions using a Facial Action Coding System (FACS). These muscle movements are called Action Units, and combined action units create facial expressions that can be identified with specific emotions.

To be able to predict the action units present in a facial expression I will be using a deep neural network.

A very common practice used in the domain of neural networks and computer vision is transfer learning. Transfer learning consists of using part of an existing neural network that has been trained to solve a particular problem and modifying certain layers so it’s able to solve a similar but different problem. In the context of image understanding, what you expect to get from the network you are transferring from is the ability to find patterns and features in images. Because these models are used to classify natural images, there are a lot of features common to these, like edges, surfaces and shapes.

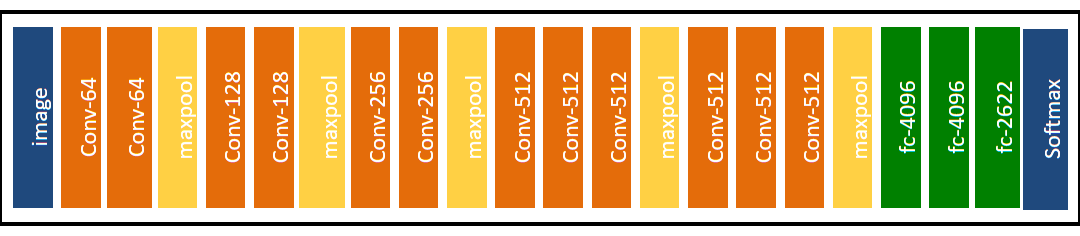

In my case, I’ll be doing transfer learning from a very popular convolutional neural network called VGG16, proposed by K. Simonyan and A. Zisserman from the University of Oxford. More specifically, I’ll be using a version of this convolutional neural network that was trained to detect faces in images.

So, I will use part of this network as a feature detector, and on top of that, I will add a few layers that will work as a classifier for the features identified by the lower layers. The classifier’s job will be to predict the Action Units (or set of muscle movements) present in the image. From that, I will layer another classifier, either another neural network or a simpler clustering algorithm. This classifier will predict the emotion underlying the facial expressions given the set of action units.

I hope this served as a general introduction to my project. Stay tuned for the next post, which will come up pretty soon and will go into detail regarding how I chose and preprocessed the data to train the model and how exactly I modified VGG16 to fit my target problem.