Goodbye, GASNet!

All the world’s a stage, and all the men and women merely players: they have their exits and their entrances…

William Shakespeare

I admit this is a bit melodramatic, but maybe it’s the end of these two great months that makes me a bit emotional! Still, I think one can describe this summer as a three-act drama, with the acts represented by my blog posts:

- The first one was the introduction, where you got to know all important characters of the play.

- The second one was the confrontation, where I had to fight against the code to get it to work!

- And this is the third one, the resolution, where I’ll reveal the final results and answer all open questions (Okay, probably not all, but hopefully many).

Okay, to be honest, the main reason for seeing it this way is that I get to quote Shakespeare in an HPC blog post, which is quite unusual, I believe! But there is some truth in it…

Now it’s time to stop philosophizing and start answering questions. To simplify things, I will summarise my whole project in one question:

Is GASNet a valid alternative to MPI?

Allow me one last comment before finally showing you the results: replacing MPI with GASNet the way we did is quite an unusual way of working with GASNet’s active messages. We don’t really make use of the “active” nature of GASNet, so one could probably redesign the applications to make better use of it. But our goal was to find a simple drop-in replacement for MPI. So if the answer to the above question turns out to be no (spoiler alert: it will), then I mean just that specific use case, not GASNet as a whole!

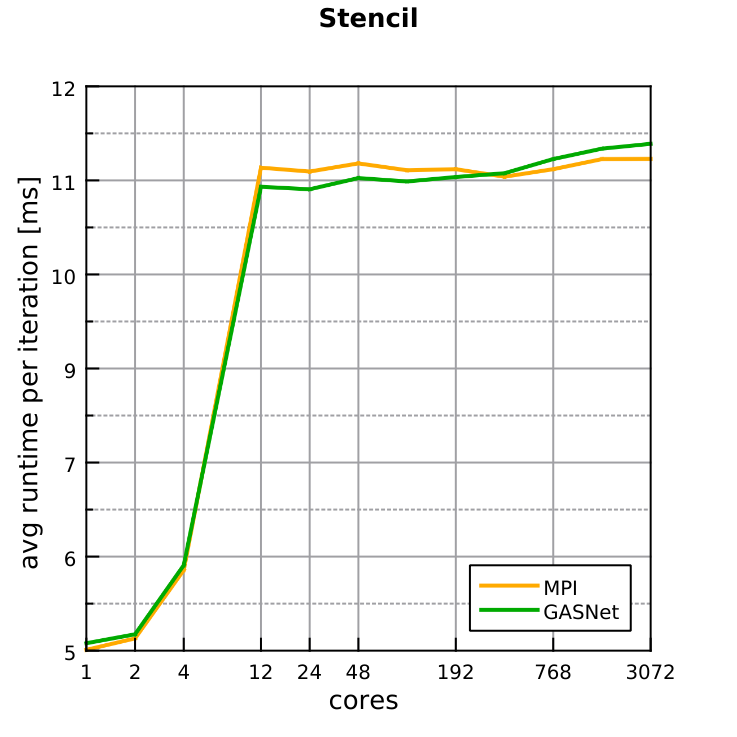

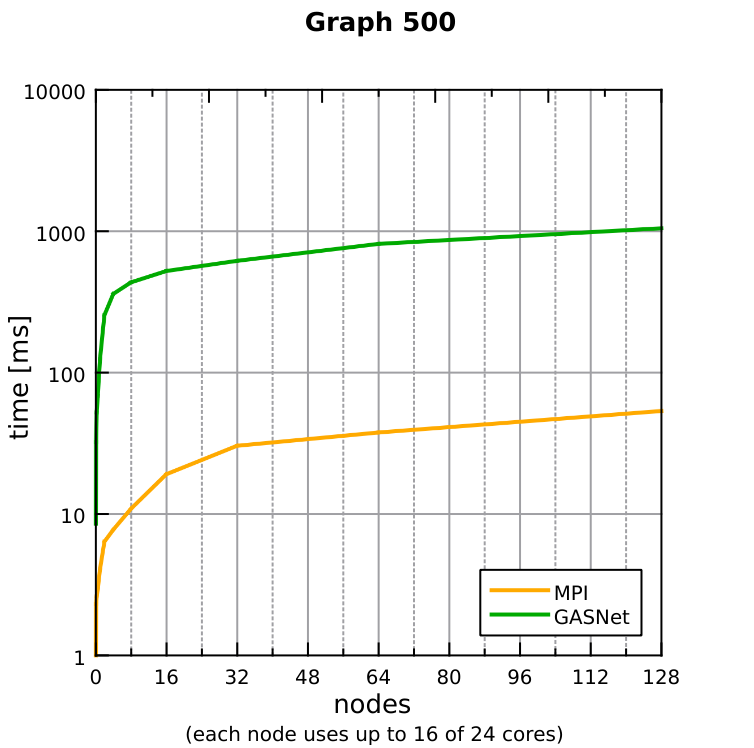

Below you see two plots of the performance of two very different applications:

I won’t explain all the details of these applications here; for that, check out my video or my final report. There are only two things I want to point out:

- The scaling behaviour: On the x-axis of both plots you see the number of cores (once on a log scale, once linear). If we increase the computing power by adding cores, we have to decide whether we also want to change the amount of work the application has to do:

  - If we don’t change the amount of work, the runtime will hopefully decrease. In the best case, twice the number of cores results in half the runtime. This shows how well our application scales and is called strong scaling.

  - If we increase the amount of work in proportion to the number of cores, the runtime should stay constant. This is called weak scaling. If the runtime does not stay constant, it is a sign that the underlying network is causing problems. You can see this behaviour in the plots: in the left one the time is pretty constant, in the right one it increases a bit at the end.

- The communication pattern: this is what we call the kind and number of messages a program sends. The stencil usually sends a few large messages, whereas Graph500 sends many small ones. And here we see the big performance difference: in the first case, MPI and GASNet are almost equal, while in the second they are far apart. We assume that the reason is a difference in latency. This means that GASNet simply takes longer to react to each message send, which has a much bigger impact when there are many small messages.
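To make these two points a bit more concrete, here is a minimal Python sketch. It is not taken from the project code: the function names, the simple latency-plus-bandwidth cost model and all numbers are assumptions chosen purely for illustration, and "library A" and "library B" are hypothetical stand-ins, not measurements of MPI or GASNet.

```python
def strong_scaling_efficiency(t1, tp, p):
    """Fixed total work: the ideal runtime on p cores is t1 / p."""
    return t1 / (p * tp)


def weak_scaling_efficiency(t1, tp):
    """Work grows with the core count: the ideal runtime stays at t1."""
    return t1 / tp


def transfer_time(n_messages, bytes_per_message, latency_s, bandwidth_bytes_per_s):
    """Simple cost model: every message pays a fixed latency plus size / bandwidth."""
    return n_messages * (latency_s + bytes_per_message / bandwidth_bytes_per_s)


# Hypothetical network parameters (assumptions, not measured values).
BANDWIDTH = 1e9      # bytes per second, identical for both libraries
LATENCY_A = 1e-6     # seconds per message for hypothetical library A
LATENCY_B = 2e-6     # seconds per message for hypothetical library B

# Both patterns move the same 100 MB in total.
# Stencil-like pattern: a few large messages.
big_a = transfer_time(100, 1e6, LATENCY_A, BANDWIDTH)
big_b = transfer_time(100, 1e6, LATENCY_B, BANDWIDTH)

# Graph500-like pattern: many small messages.
small_a = transfer_time(10_000_000, 10, LATENCY_A, BANDWIDTH)
small_b = transfer_time(10_000_000, 10, LATENCY_B, BANDWIDTH)

ratio_big = big_b / big_a        # close to 1: latency hardly matters
ratio_small = small_b / small_a  # close to 2: latency dominates

if __name__ == "__main__":
    print(f"few large messages:  B/A = {ratio_big:.3f}")
    print(f"many small messages: B/A = {ratio_small:.3f}")
    print(f"strong scaling efficiency: {strong_scaling_efficiency(100.0, 50.0, 2):.2f}")
    print(f"weak scaling efficiency:   {weak_scaling_efficiency(10.0, 12.5):.2f}")
```

In this toy model, doubling the per-message latency barely changes the time for a few large messages but almost doubles it for many small ones. That matches the qualitative picture in the plots, although the real reasons for the gap may of course be more complicated.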

However, GASNet is not able to beat MPI in either of these cases, so in the end we must conclude: it is not worth using GASNet’s active messages as a replacement for passive point-to-point communication via MPI. But that doesn’t mean there are no cases in which GASNet can be useful. For example, according to the plots on the official website, the RMA (remote memory access) component of GASNet performs better.

But these results don’t mean the project failed. Besides the fact that I learnt many new things and now feel very familiar with parallel programming, these results could also be interesting for the whole parallel computing community! So I’m leaving with the feeling that this wasn’t useless at all!

Goodbye, Edinburgh!

Unfortunately, it’s not just my time with GASNet that is ending now, but also my time in Edinburgh. I really fell in love with this city over the last weeks. And it was not so much the Fringe (which is very cool, too), but more the general atmosphere. We also explored the countryside around the city in the last weeks, and it is such a beautiful landscape!

I’m really sad that this time is over now, but I’m very grateful for the experience. I want to thank all the people who made this possible: PRACE and especially Leon Kos for organising this event, Ben Morse from EPCC for preparing everything in Edinburgh for our arrival, my mentors Nick Brown and Oliver Brown for this really cool project, and last but not least all the awesome people I spent my time with here, mainly my colleagues Caelen and Ebru, but also other young people like Sarah from our dormitory! Thank you all!